https://neurosciencenews.com/ketamine-antidepressant-speed-neurogenesis-20716/

Day: 06/01/2022

Harnessing the Immune System to Treat Traumatic Brain Injury

Summary: A new mouse study describes a targeted delivery system that boosts the number of specialized anti-inflammatory immune cells within the brain to restrict brain inflammation and damage. The system protected against brain cell death following brain injury and stroke, and in a model of multiple sclerosis.

Source: Babraham Institute

A therapeutic method for harnessing the body’s immune system to protect against brain damage is published today by researchers from the Babraham Institute’s Immunology research program.

The collaboration between Professor Adrian Liston (Babraham Institute) and Professor Matthew Holt (VIB and KU Leuven; i3S-University of Porto) has produced a targeted delivery system for boosting the numbers of specialized anti-inflammatory immune cells specifically within the brain to restrict brain inflammation and damage.

Their brain-specific delivery system protected against brain cell death following brain injury, stroke and in a model of multiple sclerosis.

The research is published today in the journal Nature Immunology.

Traumatic brain injury, like that caused during a car accident or a fall, is a significant cause of death worldwide and can cause long-lasting cognitive impairment and dementia in people who survive.

A leading cause of this cognitive impairment is the inflammatory response to the injury, with swelling of the brain causing permanent damage.

Inflammation in other parts of the body can be addressed therapeutically, but in the brain it is problematic because of the blood-brain barrier, which prevents common anti-inflammatory molecules from getting to the site of trauma.

Prof. Liston, a senior group leader in the Babraham Institute’s Immunology program, explained their approach: “Our bodies have their own anti-inflammatory response, regulatory T cells, which have the ability to sense inflammation and produce a cocktail of natural anti-inflammatories. Unfortunately there are very few of these regulatory T cells in the brain, so they are overwhelmed by the inflammation following an injury.

“We sought to design a new therapeutic to boost the population of regulatory T cells in the brain, so that they could manage inflammation and reduce the damage caused by traumatic injury.”

The research team found that regulatory T cell numbers were low in the brain because of a limited supply of the crucial survival molecule interleukin 2, also known as IL2. Levels of IL2 are low in the brain compared to the rest of the body as it can’t pass the blood-brain barrier.

Together the team devised a new therapeutic approach that allows more IL2 to be made by brain cells, thereby creating the conditions needed by regulatory T cells to survive. A ‘gene delivery’ system based on an engineered adeno-associated viral vector (AAV) was used: this system can actually cross an intact blood-brain barrier and deliver the DNA needed for the brain to produce more IL2.

Commenting on the work, Prof. Holt, from VIB and KU Leuven, says that “for years, the blood-brain barrier has seemed like an insurmountable hurdle to the efficient delivery of biologics to the brain.

“Our work, using the latest in viral vector technology, proves that this is no longer the case; in fact, it is possible that under certain circumstances, the blood-brain barrier may actually prove to be therapeutically beneficial, serving to prevent ‘leak’ of therapeutics into the rest of the body.”

The new therapeutic designed by the research teams was able to boost the levels of the survival molecule IL2 in the brain, up to the same levels found in the blood. This allowed the number of regulatory T cells to build up in the brain, up to 10-fold higher than normal.

To test the efficacy of the treatment in a mouse model that closely resembles traumatic brain injury accidents, mice were given carefully controlled brain impacts and then treated with the IL-2 gene delivery system.

The scientists found that the treatment was effective at reducing the amount of brain damage following the injury, assessed by comparing both the loss of brain tissue and the ability of the mice to perform in cognitive tests.

Lead author, Dr. Lidia Yshii, Associate Professor at KU Leuven, explained: “Seeing the brains of the mice after the first experiment was a ‘eureka moment’—we could immediately see that the treatment reduced the size of the injury lesion.”

Recognizing the wider potential of a drug capable of controlling brain inflammation, the researchers also tested the effectiveness of the approach in experimental mouse models of multiple sclerosis and stroke.

In the model of multiple sclerosis, treating mice during the early symptoms prevented severe paralysis and allowed the mice to recover faster. In a model of stroke, mice treated with the IL2 gene delivery system after a primary stroke were partially protected from secondary strokes occurring two weeks later.

In a follow-up study, still undergoing peer review, the research team also demonstrated that the treatment was effective at preventing cognitive decline in aging mice.

“By understanding and manipulating the immune response in the brain, we were able to develop a gene delivery system for IL2 as a potential treatment for neuroinflammation.

“With tens of millions of people affected every year, and few treatment options, this has real potential to help people in need. We hope that this system will soon enter clinical trials, essential to test whether the treatment also works in patients,” said Prof. Liston.

Dr. Ed Needham, a neurocritical care consultant at Addenbrooke’s Hospital who was not a part of the study, commented on the clinical relevance of these results: “There is an urgent clinical need to develop treatments which can prevent secondary injury that occurs after a traumatic brain injury. Importantly these treatments have to be safe for use in critically unwell patients who are at high risk of life-threatening infections.

“Current anti-inflammatory drugs act on the whole immune system, and may therefore increase patients’ susceptibility to such infections.

“The exciting progress in this study is that, not only can the treatment successfully reduce the brain damage caused by inflammation, but it can do so without affecting the rest of the body’s immune system, thereby preserving the natural defenses needed to survive critical illness.”

Abstract

Astrocyte-targeted gene delivery of interleukin 2 specifically increases brain-resident regulatory T cell numbers and protects against pathological neuroinflammation

The ability of immune-modulating biologics to prevent and reverse pathology has transformed recent clinical practice. Full utility in the neuroinflammation space, however, requires identification of both effective targets for local immune modulation and a delivery system capable of crossing the blood–brain barrier.

The recent identification and characterization of a small population of regulatory T (Treg) cells resident in the brain presents one such potential therapeutic target.

Here, we identified brain interleukin 2 (IL-2) levels as a limiting factor for brain-resident Treg cells.

We developed a gene-delivery approach for astrocytes, with a small-molecule on-switch to allow temporal control, and enhanced production in reactive astrocytes to spatially direct delivery to inflammatory sites. Mice with brain-specific IL-2 delivery were protected in traumatic brain injury, stroke and multiple sclerosis models, without impacting the peripheral immune system.

These results validate brain-specific IL-2 gene delivery as effective protection against neuroinflammation, and provide a versatile platform for delivery of diverse biologics to neuroinflammatory patients.

Early Sound Exposure in the Womb Shapes the Auditory System

Summary: Muffled sounds experienced in the womb prime the brain’s ability to interpret some sounds and may be key for auditory development.

Source: MIT

Inside the womb, fetuses can begin to hear some sounds around 20 weeks of gestation. However, the input they are exposed to is limited to low-frequency sounds because of the muffling effect of the amniotic fluid and surrounding tissues.

A new MIT-led study suggests that this degraded sensory input is beneficial, and perhaps necessary, for auditory development.

Using simple computer models of human auditory processing, the researchers showed that initially limiting input to low-frequency sounds as the models learned to perform certain tasks actually improved their performance.

Along with an earlier study from the same team, which showed that early exposure to blurry faces improves computer models’ subsequent generalization ability to recognize faces, the findings suggest that receiving low-quality sensory input may be key to some aspects of brain development.

“Instead of thinking of the poor quality of the input as a limitation that biology is imposing on us, this work takes the standpoint that perhaps nature is being clever and giving us the right kind of impetus to develop the mechanisms that later prove to be very beneficial when we are asked to deal with challenging recognition tasks,” says Pawan Sinha, a professor of vision and computational neuroscience in MIT’s Department of Brain and Cognitive Sciences, who led the research team.

In the new study, the researchers showed that exposing a computational model of the human auditory system to a full range of frequencies from the beginning led to worse generalization performance on tasks that require absorbing information over longer periods of time — for example, identifying emotions from a voice clip.

From the applied perspective, the findings suggest that babies born prematurely may benefit from being exposed to lower-frequency sounds rather than the full spectrum of frequencies that they now hear in neonatal intensive care units, the researchers say.

Marin Vogelsang and Lukas Vogelsang, currently both students at EPFL Lausanne, are the lead authors of the study, which appears in the journal Developmental Science. Sidney Diamond, a retired neurologist and now an MIT research affiliate, is also an author of the paper.

Low-quality input

Several years ago, Sinha and his colleagues became interested in studying how low-quality sensory input affects the brain’s subsequent development. This question arose in part after the researchers had the opportunity to meet and study a young boy who had been born with cataracts that were not removed until he was four years old.

This boy, who was born in China, was later adopted by an American family and referred to Sinha’s lab at the age of 10. Studies revealed that his vision was nearly normal, with one notable exception: He performed very poorly in recognizing faces. Other studies of children born blind have also revealed deficits in face recognition after their sight was restored.

The researchers hypothesized that this impairment might be a result of missing out on some of the low-quality visual input that babies and young children normally receive. When babies are born, their visual acuity is very poor — around 20/800, 1/40 the strength of normal 20/20 vision. This is in part because of the lower packing density of photoreceptors in the newborn retina. As the baby grows, the receptors become more densely packed and visual acuity improves.

“The theory we proposed was that this initial period of blurry or degraded vision was very important. Because everything is so blurry, the brain needs to integrate over larger areas of the visual field,” Sinha says.

To explore this theory, the researchers used a type of computational model of vision known as a convolutional neural network. They trained the model to recognize faces, giving it either blurry input followed later by clear input, or clear input from the beginning. They found that the models that received fuzzy input early on showed superior generalization performance on facial recognition tasks.

Additionally, the neural networks’ receptive fields — the size of the visual area that they cover — were larger than the receptive fields in models trained on the clear input from the beginning.

After that study was published in 2018, the researchers wanted to explore whether this phenomenon could also be seen in other types of sensory systems. For audition, the timeline of development is slightly different, as full-term babies are born with nearly normal hearing across the sound spectrum. However, during the prenatal period, while the auditory system is still developing, babies are exposed to degraded sound quality in the womb.

To examine the effects of that degraded input, the researchers trained a computational model of human audition to perform a task that requires integrating information over long time periods — identifying emotion from a voice clip. As the models learned the task, the researchers fed them one of four different types of auditory input: low frequency only, full frequency only, low frequency followed by full frequency, and full frequency followed by low frequency.
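The four training regimens described above can be sketched in a few lines of Python. This is only an illustration, not the researchers' model or code: the one-pole filter, the 500 Hz cutoff, the 16 kHz sample rate, the switch at the halfway point of training, and all function names are assumptions made for the example.

```python
import math

# Hypothetical labels for the four input regimens described in the article.
REGIMENS = ("low_only", "full_only", "low_then_full", "full_then_low")


def low_pass(signal, cutoff_hz, sample_rate_hz):
    """Apply a simple one-pole low-pass filter to a list of samples.

    This stands in for womb-like muffling; the real study may have
    used a different filter entirely.
    """
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    filtered = []
    previous = 0.0
    for sample in signal:
        previous = previous + alpha * (sample - previous)
        filtered.append(previous)
    return filtered


def input_kind(regimen, epoch, total_epochs):
    """Return 'low' or 'full' for a given training epoch.

    Assumption: two-phase regimens switch halfway through training.
    """
    first_half = epoch < total_epochs // 2
    if regimen == "low_only":
        return "low"
    if regimen == "full_only":
        return "full"
    if regimen == "low_then_full":
        return "low" if first_half else "full"
    if regimen == "full_then_low":
        return "full" if first_half else "low"
    raise ValueError(f"unknown regimen: {regimen}")


def prepare_clip(signal, regimen, epoch, total_epochs,
                 cutoff_hz=500.0, sample_rate_hz=16000.0):
    """Return the clip the model would hear at this epoch."""
    if input_kind(regimen, epoch, total_epochs) == "low":
        return low_pass(signal, cutoff_hz, sample_rate_hz)
    return list(signal)
```

Under these assumptions, the "low_then_full" regimen is the one that mimics the progression from the womb to the outside world: early epochs see only filtered clips, and later epochs see the unfiltered signal.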

Low frequency followed by full frequency most closely mimics what developing infants are exposed to, and the researchers found that the computer models exposed to that scenario exhibited the most generalized performance profile on the emotion recognition task. Those models also generated larger temporal receptive fields, meaning that they were able to analyze sounds occurring over a longer time period.

This suggests, just like the vision study, that degraded input early in development actually promotes better sensory integration abilities later in life.

“It supports the idea that starting with very limited information, and then getting better and better over time might actually be a feature of the system rather than being a bug,” Lukas Vogelsang says.

Effects of premature birth

Previous research done by other labs has found that babies born prematurely do show impairments in processing low-frequency sounds. They perform worse than full-term babies on tests of emotion classification later in life. The MIT team’s computational findings suggest that these impairments may be the result of missing out on some of the low-quality sensory input they would normally receive in the womb.

“If you provide full-frequency input right from the get-go, then you are taking away the impetus on the part of the brain to try to discover long range or extended temporal structure. It can get by with just local temporal structure,” Sinha says. “Presumably that is what immediate immersion in full-frequency soundscapes does to the brain of a prematurely born child.”

The researchers suggest that for babies born prematurely, it could be beneficial to expose them to primarily low-frequency sounds after birth, to mimic the womb-like conditions they’re missing out on.

The research team is now exploring other areas in which this kind of degraded input may be beneficial to brain development. These include aspects of vision, such as color perception, as well as qualitatively different domains such as linguistic development.

“We have been surprised by how consistent the narrative and the hypothesis of the experimental results are, to this idea of initial degradations being adaptive for developmental purposes,” Sinha says.

“I feel that this work illustrates the gratifying surprises science offers us. We did not expect that the ideas which germinated from our work with congenitally blind children would have much bearing on our thinking about audition. But, in fact, there appears to be a beautiful conceptual commonality between the two domains. And, maybe that common thread goes even beyond these two sensory modalities. There are clearly a host of exciting research questions ahead of us.”

Funding: The research was funded by the National Institutes of Health.

Abstract

Prenatal auditory experience and its sequelae

Towards the end of the second trimester of gestation, a human fetus is able to register environmental sounds. This in-utero auditory experience is characterized by comprising strongly low-pass filtered versions of sounds from the external world.

Here, we present computational tests of the hypothesis that this early exposure to severely degraded auditory inputs serves an adaptive purpose – it may induce the neural development of extended temporal integration.

Such integration can facilitate the detection of information carried by low-frequency variations in the auditory signal, including emotional or other prosodic content.

To test this prediction, we characterized the impact of several training regimens, biomimetic and otherwise, on a computational model system trained and tested on the task of emotion recognition.

We find that training with an auditory trajectory recapitulating that of a neurotypical infant in the pre-to-post-natal period results in temporally-extended receptive field structures and yields the best subsequent accuracy and generalization performance on the task of emotion recognition.

This strongly suggests that the progression from low-pass-filtered to full-frequency inputs is likely to be an adaptive feature of our development, conferring significant benefits to later auditory processing abilities relying on temporally-extended analyses.

Additionally, this finding can help explain some of the auditory impairments associated with preterm births, suggests guidelines for the design of auditory environments in neonatal care units, and points to enhanced training procedures for computational models.

- A human fetus’ auditory experience comprises strongly low-pass-filtered versions of sounds in the environment. We examine the potential consequences of these degradations of incident sounds.

- Results of our computational simulations strongly suggest that, rather than being epiphenomenal limitations, these degradations are likely to be an adaptive feature of our development.

- These findings have implications for auditory impairments associated with preterm births, the design of auditory environments in neonatal care units, and enhanced computational training procedures.

Synaptic Connectivity of a Novel Cell Population in the Striatum

Summary: Researchers characterize a novel neural population within the striatum that appears to be responsible for the interplay between acetylcholine and GABA.

Source: Karolinska Institute

A new study from the Department of Neuroscience at Karolinska Institutet characterizes a novel neuronal population in the basal ganglia, responsible for the interaction between two types of neurotransmitters, GABA and acetylcholine.

The study was recently published in Cell Reports.

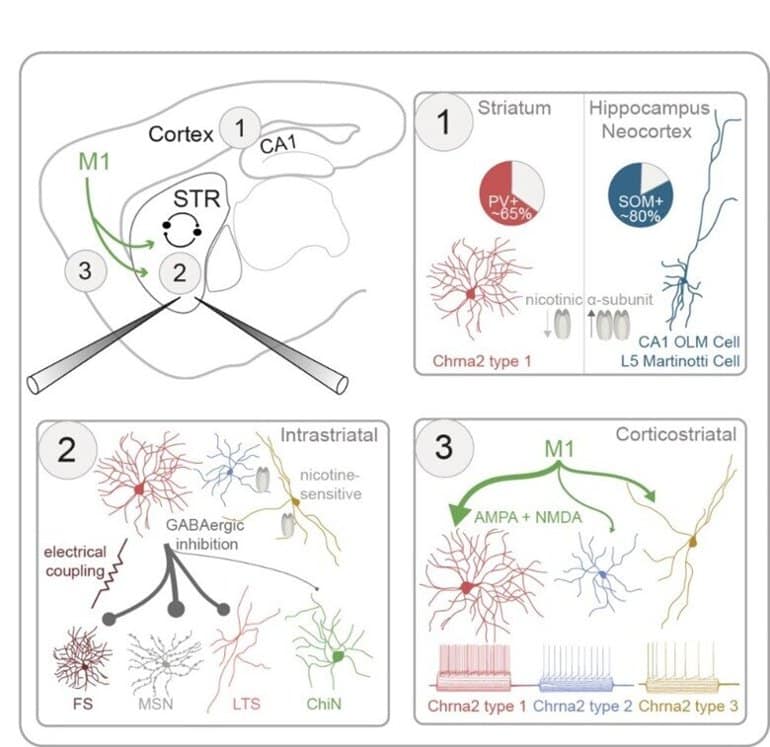

The striatum is the main input structure of the basal ganglia, a brain region involved in a variety of sensorimotor functions and reinforcement learning. 99% of striatal neurons are inhibitory GABAergic cells, and the only exception is the population of cholinergic interneurons.

“In previous studies we have showed the interactions between cholinergic interneurons and the dopamine system, and here we focused on the interactions between the cholinergic and GABAergic systems in the striatum,” explains Gilad Silberberg, Professor at the Department of Neuroscience, and main author of the study.

The striatum is strongly modulated by acetylcholine and early treatment for Parkinson’s disease was based on the cholinergic system. Cholinergic interneurons have been shown to change their activity in Parkinson’s disease, Huntington’s disease and in various forms of dyskinesia, all of which are disorders related to striatal function.

“Here we wanted to study how the cholinergic activity shapes striatal activity via nicotinic receptors, a specific type of acetylcholine receptor,” says Anna Tokarska, Ph.D. student in the Silberberg laboratory and first author of the study.

“To do that, we used transgenic mice, marking the striatal interneurons expressing these nicotinic receptors through the Chrna2 gene. We could then use various methods including patch-clamp and optogenetics, to characterize these neurons and their synaptic connectivity in the striatum,” she continues.

The striatal Chrna2 interneuron population was very diverse, including at least three main subpopulations with distinct anatomical and electrical properties. One population was of particular interest, showing novel characteristics including strong response to acetylcholine.

Future steps in this line of research will be to study this population in further detail, including its involvement in striatal function and dysfunction.

Abstract

GABAergic interneurons expressing the α2 nicotinic receptor subunit are functionally integrated in the striatal microcircuit

Highlights

- Triple whole-cell recordings are used to study striatal interneurons in Chrna2-Cre mice

- Unlike in other brain regions, most striatal Chrna2-interneurons express parvalbumin

- Three distinct subtypes of striatal Chrna2-interneurons are defined

- Their synaptic connectivity is mapped using optogenetics and patch-clamp recordings

Summary

The interactions between the striatal cholinergic and GABAergic systems are crucial in shaping reward-related behavior and reinforcement learning; however, the synaptic pathways mediating them are largely unknown.

Here, we use Chrna2-Cre mice to characterize striatal interneurons (INs) expressing the nicotinic α2 receptor subunit.

Using triple patch-clamp recordings combined with optogenetic stimulations, we characterize the electrophysiological, morphological, and synaptic properties of striatal Chrna2-INs.

Striatal Chrna2-INs have diverse electrophysiological properties, distinct from their counterparts in other brain regions, including the hippocampus and neocortex.

Unlike in other regions, most striatal Chrna2-INs are fast-spiking INs expressing parvalbumin. Striatal Chrna2-INs are intricately integrated in the striatal microcircuit, forming inhibitory synaptic connections with striatal projection neurons and INs, including other Chrna2-INs. They receive excitatory inputs from primary motor cortex mediated by both AMPA and NMDA receptors.

A subpopulation of Chrna2-INs responds to nicotinic input, suggesting reciprocal interactions between this GABAergic interneuron population and striatal cholinergic synapses.

Don’t blame the messenger: lessons learned for cancer mRNA vaccines during the COVID-19 pandemic

mRNA vaccines have proven safe and effective in preventing serious illness and death during the COVID-19 pandemic. These technologies offer a novel and intriguing approach towards personalizing immune-based treatments for patients with cancer — regardless of cancer type — with the potential for immune activation beyond commonly utilized immunotherapies.

Recently, one of us received an unexpected call from a longstanding patient with an advanced gastrointestinal cancer. “Dr. Morris,” she said, “I just saw a clip on [a national syndicated morning television show] about a clinical trial testing mRNA vaccines for cancers like mine. I would like more information on how I can participate.” Weeks earlier, with the SARS-CoV-2 Omicron variant surging through Houston, the majority of a clinic visit with the same person was spent discussing scientific inaccuracies that had thus far led to her decision not to receive a preventive mRNA vaccine against SARS-CoV-2.

Patients want the safest and best therapies and trust us clinicians to deliver them in the face of misinformation widespread in social media. Preventive mRNA vaccines have reduced serious illness and death prior to infection with SARS-CoV-2 (refs 1,2). Does this technology have a role in oncology? Recent research suggests that therapeutic mRNA vaccines represent a novel and intriguing immune-based approach as a potential treatment for existing cancer. Lessons learned during the SARS-CoV-2 pandemic have provided practical insights as we seek to study and introduce these agents in clinical trials of our patients with cancer.

While cancer vaccines have been under investigation for decades, unique features of mRNA position mRNA vaccines as promising therapies. Following injection into the patient, lipophilic particles containing specified mRNA sequences of interest are taken up by antigen presenting cells, upon which mRNA transcripts are translated into the desired epitopes for immune recognition (ref. 3). In the case of SARS-CoV-2, such constructs have been designed to target the spike protein of the virus particles, and early studies in oncology have successfully generated vaccines informed by tumour whole-exome sequencing to identify the most (bioinformatically predicted) immunogenic neoantigens, unique for each patient, as a personalized therapeutic vaccine (ref. 4). Recognition of these engineered neoepitopes upon antigen presenting cell–helper (CD4+) T cell interactions triggers activation of cellular and humoral immunity alike. In contrast to immune checkpoint blockade therapies that target PD1, PDL1 or CTLA4 and that have revolutionized oncology over the past decade via modulation of T cell-mediated antitumour immunity (ref. 5), mRNA vaccines are capable of attacking ‘non-self’ cancer cells via induction of T cells and B cells alike.

Understanding the fundamentals of mRNA biology may help us to dispel misconceptions regarding the safety of these vaccines as we evaluate their use as possible cancer treatments. There have been no substantiated occurrences of vaccine mRNA integrating into, or otherwise disrupting, the host genome. Real-world experience from the hundreds of millions of people globally treated with approved mRNA vaccines against SARS-CoV-2 has substantiated the favourable safety and tolerability of this class of agents. These reassuring safety data for SARS-CoV-2 increase our confidence in the safety of therapeutic strategies for cancer as well.

While an intact, competent immune system may optimize efficacy of a vaccine, routine use of cytotoxic chemotherapy and subsequent myelosuppression presents a logistical challenge for patients undergoing cancer treatment. A recent prospective study compared immune responses with a SARS-CoV-2 mRNA vaccine between patients with solid tumours on cytotoxic, antineoplastic agents and participants without a cancer diagnosis (ref. 6). Neutralizing antibodies against SARS-CoV-2 increased after sequential vaccine doses in patients with cancer. However, this group receiving cytotoxic chemotherapy, in contrast to the control participants, experienced less of an increase specifically in T cells following vaccine administration.

On the other hand, co-administration of CD19 or CD20 antibodies, which can deplete malignant B cell activity in the treatment of various haematological malignancies, may compromise mRNA vaccine-induced humoral (B cell) responses against their desired targets. In one study evaluating SARS-CoV-2 mRNA vaccination in patients with autoimmune disorders (ref. 7), treatment with the CD20 antibody rituximab blunted the humoral response (with decreased production of neutralizing antibodies against SARS-CoV-2), but not the CD4+ or CD8+ T cell response. The time since last administration of rituximab correlated with the neutralizing antibody response to vaccination in this setting (ref. 8). Therefore, a longer duration between receipt of mRNA vaccines and start of treatment with agents targeting malignant B cells may increase the likelihood for vaccine efficacy. Collectively, these studies provide important insight into the effect of cancer and systemic myelosuppressive therapies on cellular and humoral immune responses to mRNA vaccines. In the future, timing of therapeutic mRNA vaccines should be informed not only by the type of preceding antineoplastic therapy but also by the temporal relationship between the treatments and vaccination.

Oncology is poised to benefit from the rapid scalability and dissemination of mRNA vaccines, as we have witnessed during the SARS-CoV-2 pandemic. With mRNA vaccines, immunological responses may be evoked regardless of the human leukocyte antigen (HLA) type for immune presentation (ref. 9). Many clinical trials using DNA vaccines or peptide vaccines in the treatment of cancer limit the HLA types for which participants may be eligible because of the need to bind to prespecified HLA types. Implicitly, this feature introduces the potential for disparate access to emerging therapies owing to demographic differences in HLA prevalence. This facet bodes well for the development of mRNA vaccines as potential therapeutic tools in oncology that prioritize health equity and less restricted access to promising new agents.

Amid the experiential knowledge gained of mRNA vaccines over the past several years, the lessons learned between immunologists/virologists and oncologists may be bidirectional. Through the incorporation of whole-exome sequencing of resected tumours into the generation of personalized mRNA vaccines, turnaround time back to the patient has improved to under 2 months — a remarkable accomplishment for an N = 1 therapy. The pressing need to treat patients with cancer rapidly in clinical trials has driven streamlining and efficiencies in mRNA vaccine generation, which may have broader benefits. Of great global concern throughout the recent pandemic has been the emergence of the new SARS-CoV-2 variants, with others expected to follow. While mRNA vaccines have continued to prove effective thus far in providing necessary protection against serious illness and death (even against the newer variants), an understandable concern is raised regarding preservation of such immunity by currently available vaccines against evolved strains in the future. Here, as illustrated by tumour-informed therapeutic vaccines, the ability to recapitulate rapid development of updated mRNA vaccines, based upon genomic sequences of new, unfamiliar viral variants, is reassuring as we consider the long-term implications of extended prevention against an evolving and long-term SARS-CoV-2 threat.

In early 2020, the term ‘COVID-19’ existed on the periphery of our awareness as clinicians and as citizens. Since then, multiple preventative vaccines (mRNA among others) have not only been created but have also moved expeditiously through otherwise laborious regulatory approval for rapid dissemination globally. Here, fields of industry, science, business and government have collaborated in an unprecedented manner for the welfare of the public. In doing so, millions of lives have been saved because of mRNA vaccines. Patients have been spared immeasurable morbidity, and health-care systems an immeasurable burden. However, in 2022, cancer remains the second leading cause of overall mortality in the United States (ref. 10). When faced with this crisis of cancer, we recognize that new treatments are warranted for development and distribution at larger scales, and the pipeline from development to approval of effective therapies in oncology remains cumbersome. Our patients with cancer deserve faster delivery of new options that improve their outcomes, and the efficient response observed with mRNA vaccines during the SARS-CoV-2 pandemic serves as a model template for future endeavors. We should be encouraged by this and must focus together on the scientific process and promise of such technologies for cancer drug development, with a goal of improving the lives of the patients sitting across the exam room from us today.

Gastrointestinal symptoms and fecal shedding of SARS-CoV-2 RNA suggest prolonged gastrointestinal infection

Highlights

- Approximately one-half of COVID-19 patients shed fecal RNA in the week after diagnosis

- Four percent of patients with COVID-19 shed fecal viral RNA 7 months after diagnosis

- Presence of fecal SARS-CoV-2 RNA is associated with gastrointestinal symptoms

- SARS-CoV-2 likely infects gastrointestinal tissue

Context and significance

Gastrointestinal symptoms and SARS-CoV-2 RNA shedding in feces point to the gastrointestinal tract as a possible site of infection in COVID-19. Researchers from Stanford University measured the dynamics of fecal viral RNA in patients with mild to moderate COVID-19 followed for 10 months post-diagnosis. The authors found that fecal viral RNA shedding was correlated with gastrointestinal symptoms in patients who had cleared their respiratory infection. They also observed that fecal shedding can continue to 7 months post-diagnosis. In conjunction with recent related findings, this work presents compelling evidence of SARS-CoV-2 infection in the gastrointestinal tract and suggests a possible role for long-term infection of the gastrointestinal tract in syndromes such as “long COVID.”

Summary

Background

COVID-19 manifests with respiratory, systemic, and gastrointestinal (GI) symptoms. SARS-CoV-2 RNA is detected in respiratory and fecal samples, and recent reports demonstrate viral replication in both the lung and intestinal tissue. Although much is known about early fecal RNA shedding, little is known about long-term shedding, especially in those with mild COVID-19. Furthermore, most reports of fecal RNA shedding do not correlate these findings with GI symptoms.

Methods

We analyzed the dynamics of fecal RNA shedding up to 10 months after COVID-19 diagnosis in 113 individuals with mild to moderate disease. We also correlated shedding with disease symptoms.

Findings

Fecal SARS-CoV-2 RNA is detected in 49.2% [95% confidence interval, 38.2%–60.3%] of participants within the first week after diagnosis. Whereas there was no ongoing oropharyngeal SARS-CoV-2 RNA shedding in subjects at 4 months, 12.7% [8.5%–18.4%] of participants continued to shed SARS-CoV-2 RNA in the feces at 4 months after diagnosis and 3.8% [2.0%–7.3%] shed at 7 months. Finally, we found that GI symptoms (abdominal pain, nausea, vomiting) are associated with fecal shedding of SARS-CoV-2 RNA.

Conclusions

The extended presence of viral RNA in feces, but not in respiratory samples, along with the association of fecal viral RNA shedding with GI symptoms suggest that SARS-CoV-2 infects the GI tract and that this infection can be prolonged in a subset of individuals with COVID-19.

Evidence of Ongoing Gut Infection with SARS-CoV-2 in Some Patients

During moderate-to-severe acute SARS-CoV-2 infection, viral RNA is shed in feces. Viral RNA and antigens, and even live virus, have been demonstrated in gastrointestinal (GI) biopsies. The virus also can infect intestinal cell lines.

In a study from Stanford University, researchers followed 113 people (median age, 36) for as long as 10 months after diagnoses of mild-to-moderate COVID-19; 673 fecal samples were collected at prespecified times during follow-up. Almost half (49%) of fecal samples collected during the first week after diagnoses contained viral RNA. By 7 months after diagnoses, 4% of fecal samples contained viral RNA. GI shedding of viral RNA continued long after virus could no longer be detected in the oropharynx. Ongoing shedding of viral RNA correlated significantly with GI symptoms (e.g., nausea, abdominal pain, vomiting) and non-GI symptoms (e.g., rhinorrhea, headaches, myalgias).

COMMENT

In some people with mild-to-moderate COVID-19, a long-term infection of the gut can occur, with ongoing shedding of viral RNA that can be associated with GI and non-GI symptoms. The authors speculate that a continued reservoir of infection in the immunologically active intestinal mucosa could trigger chronic inflammation which, in turn, might be a cause of “long COVID” symptoms.

Vitamin D analog does not affect incidence rates of type 2 diabetes in adults

Eldecalcitol, a vitamin D analog, is not associated with a reduced incidence of type 2 diabetes among adults with prediabetes, though it may have a beneficial effect in those with lower insulin secretion, according to study data.

“We were surprised at the findings because our pilot study showed eldecalcitol’s preventive effect on the development of type 2 diabetes,” Tetsuya Kawahara, MD, PhD, of the University of Occupational and Environmental Health and Shin Komonji Hospital in Kitakyushu, Japan, told Healio. “We think that these findings are a result of lack of statistical power, an unbalanced distribution of 2-hour plasma glucose concentrations between participants in the eldecalcitol and placebo groups, or both. Treatment with eldecalcitol was effective in increasing bone mineral densities and serum osteocalcin concentrations.”

Kawahara and colleagues conducted a randomized, double-blind, placebo-controlled trial in 1,256 adults aged 30 years or older with impaired glucose tolerance in Japan (45.5% women; mean age, 61.3 years). Participants were randomly assigned to 0.75 μg of eldecalcitol daily (n = 630) or placebo (n = 626) for 3 years. Study visits took place at 3-month intervals and included a routine clinical exam, a 75 g oral glucose tolerance test, collection of serum 25-hydroxyvitamin D and 1,25-dihydroxyvitamin D levels and a measurement of bone mineral density (BMD). The primary endpoint was the development of type 2 diabetes from baseline to 3 years.

The findings were published in The BMJ.

During the follow-up period, 79 adults in the eldecalcitol group and 89 in the placebo group developed diabetes. There was no significant difference in diabetes incidence between the two groups. There was also no difference in the percentage of participants achieving normoglycemia.
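As a rough check on those counts, the crude cumulative incidence in each arm and an unadjusted risk ratio can be computed directly. This is a minimal sketch, not the trial's published analysis: it assumes all 630 and 626 randomized participants count in the denominators and uses a standard log-scale normal approximation for the 95% interval. The function name `risk_ratio_ci` is illustrative.

```python
import math

def risk_ratio_ci(events_a, n_a, events_b, n_b, z=1.96):
    """Crude risk ratio (arm A vs arm B) with a log-scale Wald interval."""
    risk_a = events_a / n_a
    risk_b = events_b / n_b
    rr = risk_a / risk_b
    # Standard error of log(RR) for two independent binomial samples
    se_log_rr = math.sqrt(1 / events_a - 1 / n_a + 1 / events_b - 1 / n_b)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return risk_a, risk_b, rr, lower, upper

# Counts reported in the article: 79 of 630 (eldecalcitol), 89 of 626 (placebo)
risk_e, risk_p, rr, lower, upper = risk_ratio_ci(79, 630, 89, 626)
print(f"eldecalcitol: {risk_e:.1%}, placebo: {risk_p:.1%}")
print(f"crude risk ratio: {rr:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

Under these assumptions the interval spans 1, which is consistent with the reported lack of a significant difference between the groups.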

Eldecalcitol lowers diabetes risk in adults with low insulin secretion

In post hoc analysis, participants were divided into tertiles based on homoeostasis model assessment beta-cell function, HOMA of insulin resistance and fasting immunoreactive insulin levels. Adults in the lowest tertiles for all three metrics taking eldecalcitol had a lower risk for developing type 2 diabetes compared with placebo.

“Although treatment with eldecalcitol did not significantly reduce the incidence of diabetes among people with prediabetes, the results suggested the potential for a beneficial effect of eldecalcitol on people with insufficient insulin secretion,” Kawahara said.

Serum 1,25-dihydroxyvitamin D and bone alkaline phosphatase levels were significantly lower in the eldecalcitol group compared with placebo, and those taking eldecalcitol had higher BMD of the lumbar spine and femoral neck than those taking placebo.

Larger intervention studies needed

In a related editorial, Tatiana Christides, MD, PhD, senior lecturer in medical sciences at Barts and the London School of Medicine and Dentistry, Queen Mary University of London, fellow of the Royal Society of Medicine and president emeritus of the Royal Society of Medicine Food and Health Forum, said she was not surprised that there was no difference between the two groups in diabetes incidence rates, though the findings were still disappointing.

“We have now seen, for multiple medical conditions, that clinical intervention studies looking for an effect of vitamin D supplementation on disease do not support epidemiological evidence showing an association of low vitamin D status with the disease,” Christides told Healio. “I think vitamin D is the ‘poster child’ for making people aware that association does not mean causation.”

Both Christides and Kawahara noted the study was underpowered to detect the small difference in type 2 diabetes incidence between the groups. Additionally, Christides said the study findings may differ in other populations and that the study lacks data on the degree of vitamin D deficiency in participants.

“What we now need are larger intervention studies well powered to detect a small, but clinically meaningful, decrease in progression risk, carried out in younger and diverse populations,” Christides said. “We also need to know if vitamin D status including degree of deficiency interacts with supplementation response to progression risk.”

Gestational diabetes combined with overweight or obesity increases severe COVID-19 risk

Pregnant women with gestational diabetes, combined with periconceptional overweight or obesity, had an increased risk for severe COVID-19, according to results of a prospective observational study.

This was especially prevalent among pregnant women who required insulin therapy, data from the COVID-19 Obstetric and Neonatal Outcome Study (CRONOS) showed.

“Most important for clinicians to know is that not only pre-existing diabetes, but also gestational diabetes is a risk factor for severe COVID-19, especially when it occurs in overweight individuals,” Ulrich Pecks, MD, a professor in the department of obstetrics and gynecology at the University Hospital Schleswig-Holstein in Kiel, Germany, told Healio. “Presumably, the severity of the diabetic metabolic condition also plays a role.”

While it is known that gestational diabetes mellitus (GDM) is a common pregnancy complication (global prevalence was 13.4% in 2021), research on the association of GDM with maternal and neonatal pregnancy outcomes among pregnant women infected with SARS-CoV-2 is lacking.

Pecks and colleagues conducted an analysis of 1,490 women (mean age, 31 ± 5.2 years; 40.7% nulliparous) who had COVID-19 between April 3, 2020, and Aug. 24, 2021, none of whom were vaccinated, according to Pecks.

“A general recommendation for vaccination during pregnancy was not issued in Germany until September 2021 … unfortunately, vaccination rates among pregnant women are still low at about 40%,” he said.

Maternal outcomes

Overall, results showed that GDM was not associated with adverse maternal outcomes (OR = 1.5; 95% CI, 0.88-2.57). However, women with overweight or obesity and GDM were at an increased risk for adverse maternal outcomes (adjusted OR = 2.69; 95% CI, 1.43-5.07). Women with GDM who had overweight or obesity and required insulin therapy were at an even greater risk for a severe course of COVID-19 (aOR = 3.05; 95% CI, 1.38-6.73).
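One way to read these intervals is to back out an approximate standard error and p value from each reported odds ratio. The sketch below assumes the intervals are symmetric on the log scale (Wald-type), which the article does not state. `p_from_or_ci` is an illustrative helper, not part of the study's analysis.

```python
import math

def p_from_or_ci(odds_ratio, ci_low, ci_high, z_crit=1.96):
    """Approximate two-sided p value from an odds ratio and its 95% CI."""
    # Width of the CI on the log scale equals 2 * z_crit * SE
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = math.log(odds_ratio) / se
    # Two-sided normal tail probability
    p = math.erfc(abs(z) / math.sqrt(2))
    return se, z, p

# Odds ratios and 95% CIs as reported in the article
reported = {
    "GDM overall": (1.5, 0.88, 2.57),
    "GDM + overweight/obesity": (2.69, 1.43, 5.07),
    "GDM + overweight/obesity + insulin": (3.05, 1.38, 6.73),
}
for label, (or_, lo, hi) in reported.items():
    se, z, p = p_from_or_ci(or_, lo, hi)
    print(f"{label}: z = {z:.2f}, p ~ {p:.3f}")
```

Under this approximation, the first interval (which includes 1) gives a p value above 0.05, while the two adjusted estimates (which exclude 1) fall below it, matching the article's reading of the results.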

“According to our analyses, BMI and a diabetic metabolic condition play the largest roles, ahead of maternal age and other pre-existing conditions such as asthma or hypertension,” Pecks said. “The only factor more important is the gestational week of infection. Women in the third trimester have a significantly higher risk of a severe course than women in the first trimester.”

Pecks mentioned that the virus variant does play a role.

“With omicron, we see much less severe courses than with the previous variants, but they sometimes occur, and we observe a positive effect from vaccination here as well,” he said. “It cannot be emphasized often enough how valuable vaccination is against COVID-19. I have seen and cared for several women suffering from COVID-19, all of them unvaccinated. The great fear of affected women who came into the clinic because of breathing difficulties was terrible.”

Neonatal outcomes

The analyses also showed that maternal GDM and maternal pre-conceptional overweight or obesity increased the risk for adverse fetal and neonatal outcomes (aOR = 1.83; 95% CI, 1.05-3.18). Regardless of GDM status, having overweight or obesity was an influential factor in maternal (aOR = 1.87; 95% CI, 1.26-2.75) and neonatal outcomes (aOR = 1.81; 95% CI, 1.32-2.48) compared with having underweight or normal weight.

A vast majority of newborns experienced little to no effect from their mothers’ COVID-19 disease, Pecks noted.

“Here, we can fortunately reassure the affected women. However, there are secondary phenomena that can affect the newborn, such as premature birth due to COVID-19,” Pecks said. “We also confirm with our study that gestational diabetes mellitus is a serious risk for the pregnancy and the fetus, but fortunately can be easily recognized and treated.”

PERSPECTIVE

The paper by Pecks and colleagues reporting on the results of CRONOS reaffirms many of the known risk factors for more severe cases of COVID-19 and shows that — in combination — these risk factors can lead to more serious outcomes. We have known that pregnancy, obesity and diabetes individually contribute to more severe outcomes in COVID-19 patients.

Indeed, one of the first severe complications for pregnant women with COVID-19 that was noted was a presentation of a severe preeclampsia pattern. We certainly see preeclampsia more frequently in women with overweight and obesity who have GDM.

So, what shall we take away from this study? There is still significant resistance to receiving COVID-19 vaccinations among women who are contemplating pregnancy or are pregnant. Data like these reaffirm the potential consequences of COVID-19 in pregnancy, and we can explain these data to our patients to encourage vaccination. We can also use these data to encourage our patients with overweight or obesity to lose weight prepregnancy to minimize their risks, which can be particularly helpful to our patients who are still trying to wait out the pandemic before becoming pregnant.

Mary Jane Minkin, MD

Clinical professor of obstetrics and gynecology, Yale School of Medicine

Healio Women’s Health & OB/GYN Peer Perspective Board Member

With a little ‘love,’ health system culture can be changed for the better

Several years ago, our health care system developed core values and behaviors from the grassroots level up. This was the brainchild of our president and CEO, Janice E. Nevin, MD, MPH, who is a family medicine physician.

All caregivers from all levels of the organization participated and provided input.

The theme that developed was, “We serve together guided by our values of excellence and love.” That’s right, the word love.

I had a hard time putting my head around love in the medical environment. I have no problems loving my nuclear and extended family, some of my friends and colleagues, but I had a hard time fitting “love” into the patient arena.

‘Ti amo bello’

It seems strange to me, even though I was brought up in an Italian family where love was rampant, involving hugs and kisses all around. When first-time visitors entered our home and had the standard 6-hour Italian meal, the greatest joy my mother had was seeing everybody finish everything she had cooked. Upon leaving, she would hug every one of them. That was also true of my uncles and aunts, whose parting words to me after every visit were, “Ti amo bello.”

I discussed my difficulty wrapping myself around the word with our chief medical officer. His recommendation was to not think about love but rather compassion. Bazinga! Immediately a lightbulb went off in my head. Problem solved.

These values of love and excellence from the compassion perspective make sense to me. Under the category of love, our health system crafted practical statements, such as:

- We embrace diversity and show respect to everyone.

- We tell the truth with courage and empathy.

- We listen actively, seek to understand and assume good intentions.

The excellence category includes these statements:

- We use resources wisely and effectively.

- We seek new knowledge, ask for feedback and are open to change.

- We are true to our word and follow through on our commitments.

These are just some examples. Pretty simple, but very powerful.

A remarkable impact

The impact of these has been remarkable over the last 8 years. I have seen the culture on campus change because of these values and behaviors. An environment of psychological safety has been created in many areas of the health system.

Importantly, these values are not just listed on paper and hung on our walls. They are practiced every day and called out at every meeting. You can’t have a conversation without bringing up any of the core values or behaviors.

It may surprise those of you who know me as a shoot-from-the-hip New Yorker that I have embraced and adapted to the behaviors. Even my wife has noticed a change in our home environment. Well, let’s say not as dramatic a change as in my work environment.

I don’t know if this dramatic culture change has occurred at other institutions, or if something similar even exists. If it doesn’t, I encourage you to develop a grassroots approach in your organization to develop such values and behaviors.

Patient satisfaction has also skyrocketed in many areas of the health system because of this change in culture. However, there’s still a lot more to do, as everyone knows that culture doesn’t change overnight.

I’d be happy to share more of these core values and behaviors with you, so don’t hesitate to send me an email at the address below. You and your colleagues will work in a better environment and, just as importantly, your patients with cancer will benefit tremendously. As we all know, life is short. Give yourself the benefit of the doubt.

Ciao. Stay safe.

For more information:

Nicholas J. Petrelli, MD, FACS, is Bank of America endowed medical director of ChristianaCare’s Helen F. Graham Cancer Center & Research Institute and associate director of translational research at Wistar Cancer Institute. He also serves as Associate Editor of Surgical Oncology for HemOnc Today. He can be reached at npetrelli@christianacare.org.