Cleveland Clinic researchers are using artificial intelligence to uncover the link between the gut microbiome and Alzheimer’s disease.

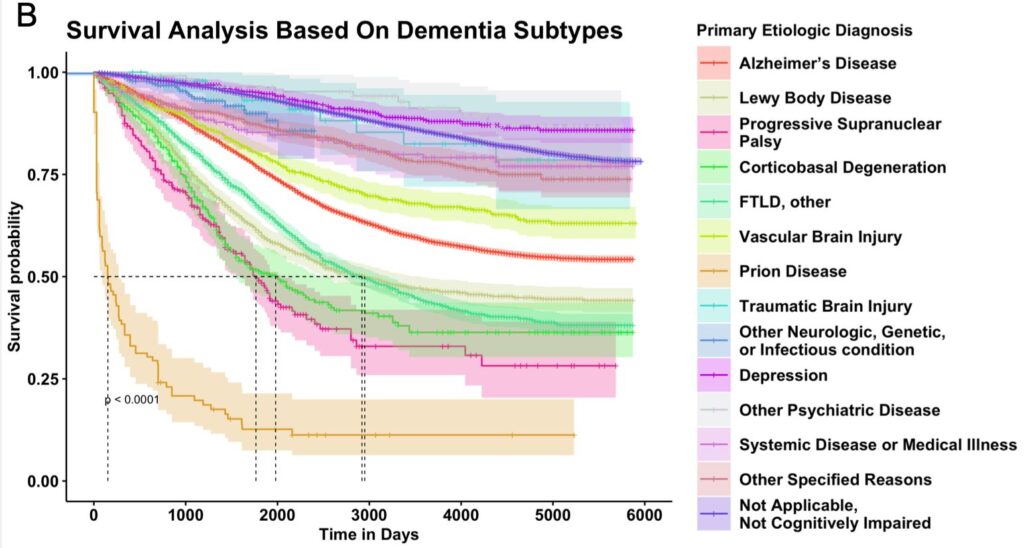

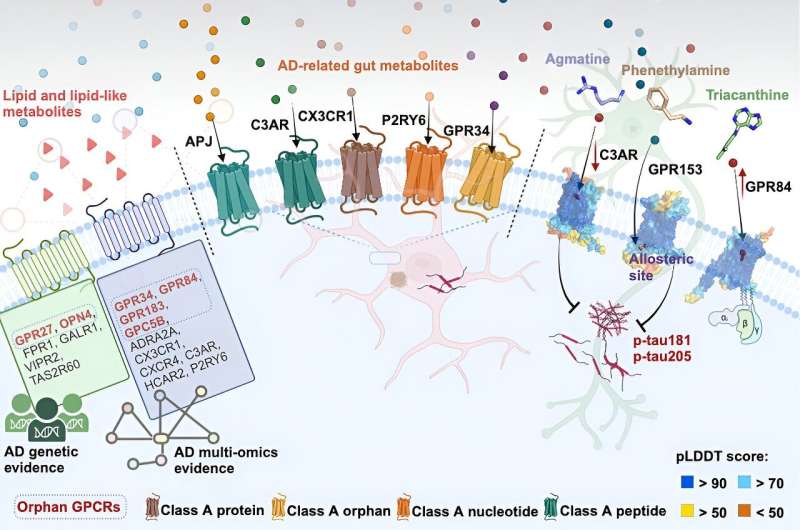

Previous studies have shown that Alzheimer’s disease patients experience changes in their gut bacteria as the disease develops. The newly published Cell Reports study outlines a computational method to determine how bacterial byproducts called metabolites interact with receptors on cells and contribute to Alzheimer’s disease.

Feixiong Cheng, Ph.D., inaugural director of the Cleveland Clinic Genome Center, worked in close collaboration with the Luo Ruvo Center for Brain Health and the Center for Microbiome and Human Health (CMHH). The study ranks metabolite-receptor pairs by how likely they are to interact with each other and how likely each pair is to influence Alzheimer’s disease. The data provide one of the most comprehensive roadmaps to date for studying metabolite-associated diseases.

Bacteria release metabolites into our systems as they break down the food we eat. The metabolites then interact with and influence cells, fueling cellular processes that can be helpful or detrimental to health. In addition to Alzheimer’s disease, researchers have connected metabolites to heart disease, infertility, cancers, autoimmune disorders and allergies.

Preventing harmful interactions between metabolites and our cells could help fight disease. Researchers are working to develop drugs that either promote or block the binding of metabolites to receptors on the cell surface. Progress with this approach is slow because of the sheer amount of information needed to identify a target receptor.

“Gut metabolites are the key to many physiological processes in our bodies, and for every key there is a lock for human health and disease,” said Dr. Cheng, Staff in Genomic Medicine. “The problem is that we have tens of thousands of receptors and thousands of metabolites in our system, so manually figuring out which key goes into which lock has been slow and costly. That’s why we decided to use AI.”

Dr. Cheng’s team tested whether well-known gut metabolites with established safety profiles could, if broadly applied, offer effective approaches for preventing or even treating Alzheimer’s disease and other complex diseases.

Study first author and Cheng Lab postdoctoral fellow Yunguang Qiu, Ph.D., spearheaded a team that included J. Mark Brown, Ph.D., Director of Research, CMHH; James Leverenz, MD, Director of Cleveland Clinic Luo Ruvo Center for Brain Health and Director of the Cleveland Alzheimer’s Disease Research Center; and neuropsychologist Jessica Caldwell, Ph.D., ABPP/CN, Director of the Women’s Alzheimer’s Movement Prevention Center at Cleveland Clinic Nevada.

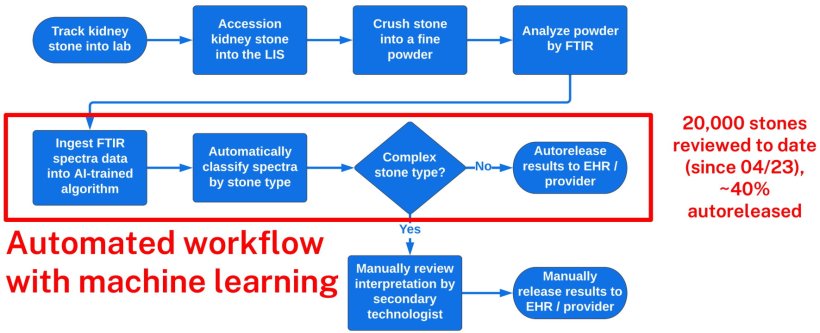

The team used a form of AI called machine learning to analyze over 1.09 million potential metabolite-receptor pairs and predict the likelihood that each interaction contributed to Alzheimer’s disease.

The analyses integrated:

- genetic and proteomic data from human and preclinical Alzheimer’s disease studies

- the shapes of different receptors (protein structures) and metabolites

- how different metabolites affect patient-derived brain cells

The team investigated the metabolite-receptor pairs with the highest likelihood of influencing Alzheimer’s disease in brain cells derived from patients with Alzheimer’s disease.
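The following is a minimal Python sketch of what "ranking pairs by likelihood" can look like. It is not the study's method. It assumes each metabolite-receptor pair already carries two hypothetical model outputs: a predicted probability that the pair interacts and a predicted probability that the pair is linked to the disease. It also assumes these can be multiplied as if independent to produce a single ranking score. The class `PairScore`, the function `top_pairs`, and all names and numbers are illustrative placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PairScore:
    metabolite: str
    receptor: str
    p_interact: float  # hypothetical: predicted probability the metabolite binds the receptor
    p_disease: float   # hypothetical: predicted probability the pair is linked to the disease

    @property
    def combined(self) -> float:
        # Assumption: treat the two predictions as independent and multiply them.
        return self.p_interact * self.p_disease


def top_pairs(pairs: list[PairScore], k: int) -> list[PairScore]:
    """Return the k pairs with the highest combined score, best first."""
    return sorted(pairs, key=lambda p: p.combined, reverse=True)[:k]


if __name__ == "__main__":
    # Toy placeholder data, not results from the study.
    toy = [
        PairScore("metabolite_A", "receptor_1", 0.9, 0.2),
        PairScore("metabolite_A", "receptor_2", 0.6, 0.7),
        PairScore("metabolite_B", "receptor_1", 0.3, 0.9),
    ]
    for p in top_pairs(toy, 2):
        print(p.metabolite, p.receptor, round(p.combined, 2))
```

Multiplying the scores favors pairs that are plausible on both counts. A pair that binds strongly but has little disease relevance (here `metabolite_A`/`receptor_1`) drops below more balanced candidates. A real pipeline would likely learn how to combine these signals rather than fix the rule by hand.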

One molecule they focused on was a protective metabolite called agmatine, thought to shield brain cells from inflammation and associated damage. The study found that agmatine was most likely to interact with a receptor called CA3R in Alzheimer’s disease.

Treating Alzheimer’s-affected neurons with agmatine directly reduced CA3R levels, indicating that the metabolite and receptor influence each other. Neurons treated with agmatine also had lower levels of phosphorylated tau protein, a marker of Alzheimer’s disease.

Dr. Cheng says these experiments demonstrate how his team’s AI algorithms can pave the way for new research avenues into many diseases beyond Alzheimer’s.

“We specifically focused on Alzheimer’s disease, but metabolite-receptor interactions play a role in almost every disease that involves gut microbes,” he said. “We hope that our methods can provide a framework to progress the entire field of metabolite-associated diseases and human health.”

Now, Dr. Cheng and his team are further developing these AI technologies and applying them to study how interactions between genetic and environmental factors (including food and gut metabolites) affect human health and disease, including Alzheimer’s disease and other complex diseases.