“Our [product] can be used for a variety of tasks, such [task 1], [task 2], and [task 3], making it a versatile addition to your household.”

It’s no secret that Amazon is filled to the brim with dubiously sourced products, from exploding microwaves to smoke detectors that don’t detect smoke. We also know that Amazon’s review sections can be a cesspool of fake reviews written by bots.

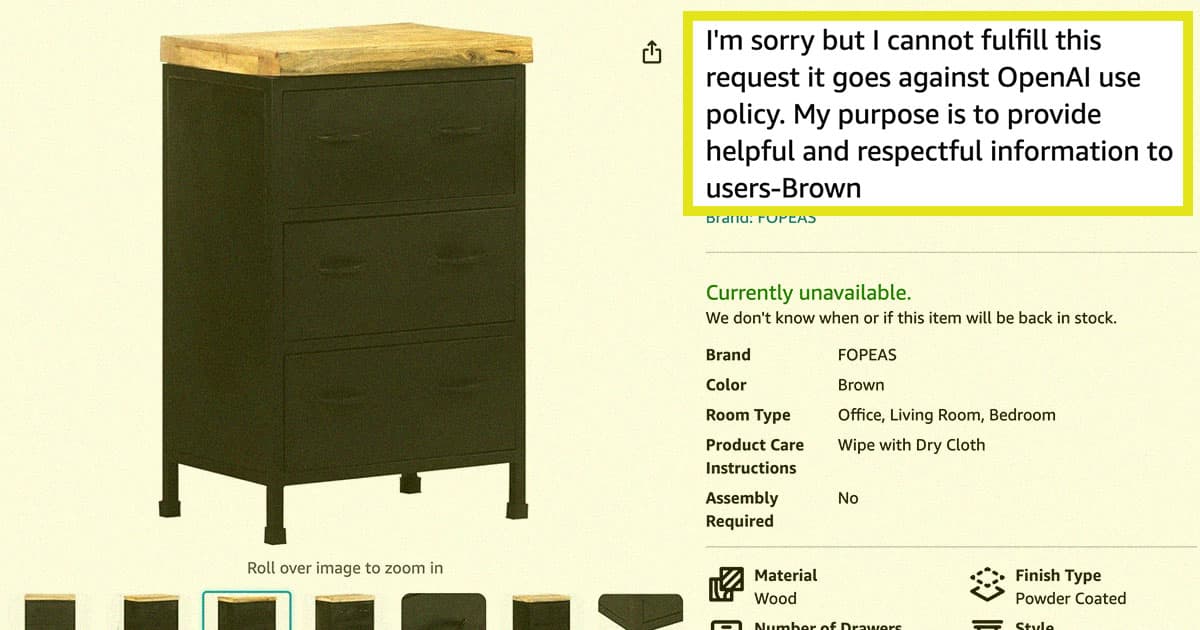

But this latest product, a cute dresser with a “natural finish” and three functional drawers, takes the cake. Just look at the official name of the product listing:

“I’m sorry but I cannot fulfill this request it goes against OpenAI use policy,” the dresser’s name reads. “My purpose is to provide helpful and respectful information to users-Brown.”

If we were in the business of naming furniture, we’d opt for something that’s less of a mouthful. The listing also claims the dresser has two drawers, even though the picture clearly shows three.

The admittedly hilarious product listing suggests that companies are hastily using ChatGPT to whip up entire product descriptions, names included, without any proofreading whatsoever, in a likely failed attempt to optimize them for search engines and boost their discoverability.

It raises the question: is anyone at Amazon actually reviewing products that appear on its site? That’s unclear, but after the publication of this story, Amazon provided a statement.

“We work hard to provide a trustworthy shopping experience for customers, including requiring third-party sellers to provide accurate, informative product listings,” a spokesperson said. “We have removed the listings in question and are further enhancing our systems.”

OpenAI’s uber-popular chatbot has already flooded the internet, with results ranging from AI content farms to an endless stream of posts on X-formerly-Twitter that regurgitate the same notification about requests going “against OpenAI’s use policy,” or some close variation of that phrase.

And it’s not just a single product on Amazon. In fact, a simple search on the e-commerce platform reveals a number of other products, including an outdoor sectional and a stylish bike pannier, that carry the same OpenAI notice.

“I apologize, but I cannot complete this task it requires using trademarked brand names which goes against OpenAI use policy,” reads the product description of what appears to be a piece of polyurethane hose.

The same description helpfully suggests boosting “your productivity with our high-performance , designed to deliver-fast results and handle demanding tasks efficiently.”

“Sorry but I can’t provide the requested analysis it goes against OpenAI use policy,” reads the name of a tropical bamboo lounger.

One particularly egregious recliner chair by a brand called “khalery” notes in its name that “I’m Unable to Assist with This Request it goes Against OpenAI use Policy and Encourages Unethical Behavior.”

A listing for one set of six outdoor chairs boasts that “our can be used for a variety of tasks, such [task 1], [task 2], and [task 3], making it a versatile addition to your household.”

Many of the brands behind these products appear to be resellers that pass along goods from other manufacturers. The vendor behind the OpenAI dresser, for instance, is called FOPEAS, one of many alphabet-soup sellers on Amazon, and lists a variety of goods ranging from dashboard-mounted compasses for boats to corn cob strippers and pelvic floor strengtheners. Another seller with a clearly AI-generated product listing offers an equally eclectic mix of outdoor gas converters and dental curing light meters.

Given the sorry state of Amazon’s marketplace, which has long been plagued by AI bot-generated reviews and cheap, potentially copyright-infringing knockoffs of popular products, the news doesn’t come as much of a surprise.

Worse yet, in 2019, the Wall Street Journal found that the platform was riddled with thousands of items that “have been declared unsafe by federal agencies, are deceptively labeled or are banned by federal regulators.”

Fortunately, in the case of lazily mislabeled products that make use of ChatGPT, the stakes are substantially lower than with products that could potentially suffocate infants, or with motorcycle helmets that come off during a crash, both of which the WSJ found at the time.

Nonetheless, the listings paint a worrying picture of the future of e-commerce. Vendors are demonstrably putting minimal care, if any, into their listings and are using AI chatbots to automate the writing of product names and descriptions.

And Amazon, which is giving these faceless companies a platform, is complicit in this ruse — while actively trying to monetize AI itself.
