
Month: February 2017

EPA joins FDA to approve 3 types of genetically modified potatoes.

How Automation is Going to Redefine What it Means to Work

On December 2nd, 1942, a team of scientists led by Enrico Fermi came back from lunch and watched as humanity created the first self-sustaining nuclear reaction inside a pile of bricks and wood underneath a football field at the University of Chicago. Known to history as Chicago Pile-1, it was celebrated in silence with a single bottle of Chianti, for those who were there understood exactly what it meant for humankind, without any need for words.

Now, something new has occurred that has, again, quietly changed the world forever. Like a whispered word in a foreign language, it was quiet in the sense that you may have heard it without grasping its full meaning. However, it’s vital that we understand this new language, and what it’s increasingly telling us, because the ramifications are set to alter everything we take for granted about the way our globalized economy functions and the ways in which we as humans exist within it.

The language is a new class of machine learning known as deep learning, and the “whispered word” was a computer’s use of it to defeat, seemingly out of nowhere, three-time European Go champion Fan Hui, not once but five times in a row. Many who read this news considered it impressive, but in no way comparable to a match against Lee Se-dol, whom many consider one of the world’s best living Go players, if not the best. Imagining such a grand duel of man versus machine, China’s top Go player predicted that Lee would not lose a single game, and Lee himself confidently expected to lose one at most.

What actually ended up happening when they faced off? Lee went on to lose all but one of their match’s five games. An AI named AlphaGo is now a better Go player than any human and has been granted the “divine” rank of 9 dan. In other words, its level of play borders on godlike. Go has officially fallen to machines, just as Jeopardy did before it to Watson, and chess before that to Deep Blue.


So, what is Go? Very simply, think of Go as Super Ultra Mega Chess. That may still sound like a small accomplishment, another feather in the cap of machines as they continue to prove themselves superior at the fun games we play, but it is anything but small, and what’s happening is no game.

AlphaGo’s historic victory is a clear signal that we’ve gone from linear to parabolic. Advances in technology are now so visibly exponential in nature that we can expect to see many more milestones crossed long before we would otherwise expect. We are entirely unprepared for these exponential advances, most notably in forms of artificial intelligence limited to specific tasks, as long as we continue to insist on employment as our primary source of income.

This may all sound like exaggeration, so let’s step back a few decades and look at what computer technology has been doing to human employment so far:

Let the above chart sink in. Do not be fooled into thinking this conversation about the automation of labor is set in the future. It’s already here. Computer technology is already eating jobs and has been since 1990.

ROUTINE WORK

All work can be divided into four types: routine and nonroutine, cognitive and manual. Routine work is the same stuff day in and day out, while nonroutine work varies. Within each of these two varieties is work that requires mostly our brains (cognitive) and work that requires mostly our bodies (manual). Where once all four types saw growth, routine work stagnated back in 1990. This happened because routine labor is the easiest for technology to shoulder: rules can be written for work that doesn’t change, and machines can then handle that work better.

Distressingly, it’s exactly routine work that once formed the basis of the American middle class. It’s routine manual work that Henry Ford transformed by paying people middle class wages to perform, and it’s routine cognitive work that once filled US office spaces. Such jobs are now increasingly unavailable, leaving only two kinds of jobs with rosy outlooks: jobs that require so little thought, we pay people little to do them, and jobs that require so much thought, we pay people well to do them.

If we imagine our economy as a plane with four engines, it can still fly on only two of them, and as long as both keep roaring we needn’t worry about crashing. But what happens when our two remaining engines also fail? That’s what the advancing fields of robotics and AI represent to those final two engines, because for the first time, we are successfully teaching machines to learn.

NEURAL NETWORKS

I’m a writer at heart, but my educational background happens to be in psychology and physics. I’m fascinated by both, so my undergraduate focus ended up being the physics of the human brain, otherwise known as cognitive neuroscience. I think once you start to look into how the human brain works, how our mass of interconnected neurons somehow results in what we describe as the mind, everything changes. At least it did for me.

As a quick primer on how our brains function: they’re a giant network of interconnected cells. Some of these connections are short, and some are long. Some cells are connected to only one other, and some are connected to many. Electrical signals pass through these connections at various rates, and subsequent neural firings happen in turn. It’s all kind of like falling dominoes, but far faster, larger, and more complex. The result, amazingly, is us, and what we’ve been learning about how we work, we’ve now begun applying to the way machines work.

One of these applications is the creation of deep neural networks, kind of like pared-down virtual brains. They provide an avenue to machine learning that has made incredible leaps previously thought to be much further down the road, if possible at all. How? It’s not just the obviously growing capability of our computers and our expanding knowledge in the neurosciences, but the vastly growing expanse of our collective data, aka big data.

BIG DATA

Big data isn’t just some buzzword. It’s information, and when it comes to information, we’re creating more and more of it every day. In fact, we’re creating so much that a 2013 report by SINTEF estimated that 90% of all information in the world had been created in the prior two years. The rate of data creation is even doubling every 1.5 years thanks to the Internet, where in 2015, every minute, we were liking 4.2 million things on Facebook, uploading 300 hours of video to YouTube, and sending 350,000 tweets. Everything we do is generating data like never before, and lots of data is exactly what machines need in order to learn to learn. Why?

Imagine programming a computer to recognize a chair. You’d need to enter a ton of instructions, and the result would still be a program that detects chairs that aren’t and misses chairs that are. So how did we learn to detect chairs? Our parents pointed at a chair and said, “chair.” Then we thought we had that whole chair thing figured out, so we pointed at a table and said “chair,” which is when our parents told us that was a “table.” In machine-learning terms this is closer to supervised learning than to reinforcement learning, since each example arrives with the correct label. The label “chair” gets connected to every chair we see, such that certain neural pathways are weighted and others aren’t. For “chair” to fire in our brains, what we perceive has to be close enough to our previous chair encounters. Essentially, our lives are big data filtered through our brains.

DEEP LEARNING

The power of deep learning is that it’s a way of using massive amounts of data to get machines to operate more like we do without giving them explicit instructions. Instead of describing “chairness” to a computer, we just plug it into the Internet and feed it millions of pictures of chairs. It then develops a general idea of “chairness.” Next we test it with even more images. Where it’s wrong, we correct it, which further improves its “chairness” detection. Repeating this process results in a computer that knows a chair when it sees one, for the most part as well as we do. The important difference, though, is that unlike us, it can then sort through millions of images in a matter of seconds.
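The predict, correct, and repeat loop described above can be illustrated with a perceptron, one of the oldest and simplest error-driven learners. It is a stand-in, not a deep network and not how any system mentioned here was built: the two features (whether an object has a backrest, and the height of its top surface in metres) and the four training examples are hypothetical, chosen only to make the idea concrete.

```python
# Hypothetical toy data: (has_backrest, top_height_m) -> 1 for "chair", 0 for "not chair".
SAMPLES = [
    ((1.0, 0.45), 1),
    ((1.0, 0.50), 1),
    ((0.0, 0.75), 0),
    ((0.0, 0.72), 0),
]

def predict(weights, bias, features):
    """Return 1 ("chair") if the weighted sum of the features clears zero, else 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0

def train(samples, epochs=20, learning_rate=0.1):
    """Classic perceptron rule: adjust the weights only when a prediction is wrong."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = label - predict(weights, bias, features)  # 0 if right, +1 or -1 if wrong
            if error:
                # Nudge each weight toward the correct answer, in proportion to its input.
                weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
                bias += learning_rate * error
    return weights, bias

weights, bias = train(SAMPLES)
print(predict(weights, bias, (1.0, 0.46)))  # an unseen object with a backrest
print(predict(weights, bias, (0.0, 0.74)))  # an unseen object without one
```

A deep network follows the same basic rhythm of predicting, measuring the error, and adjusting weights, but with millions of weights learned directly from raw pixels rather than two hand-picked features.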

This combination of deep learning and big data has resulted in astounding accomplishments just in the past year. Aside from AlphaGo, Google’s DeepMind AI learned to read and comprehend text by training on hundreds of thousands of annotated news articles. DeepMind also taught itself to play dozens of Atari 2600 video games better than humans, just by looking at the screen and its score and playing the games repeatedly. An AI named Giraffe taught itself to play chess in a similar manner using a dataset of 175 million chess positions, attaining International Master level in just 72 hours by repeatedly playing itself. In 2015, an AI even passed a visual Turing test by learning to learn in a way that let it be shown an unknown character from a fictional alphabet and then instantly reproduce that letter in a way entirely indistinguishable from a human given the same task. These are all major milestones in AI.

However, despite all these milestones, when experts were asked to estimate when a computer would defeat a prominent Go player, their answer, even just months before Google announced AlphaGo’s victory, was essentially, “Maybe in another ten years.” A decade was considered a fair guess because Go is a game so complex that I’ll just let Ken Jennings of Jeopardy fame, another former human champion defeated by AI, describe it:

Go is famously a more complex game than chess, with its larger board, longer games, and many more pieces. Google’s DeepMind artificial intelligence team likes to say that there are more possible Go boards than atoms in the known universe, but that vastly understates the computational problem. There are about 10¹⁷⁰ board positions in Go, and only 10⁸⁰ atoms in the universe. That means that if there were as many parallel universes as there are atoms in our universe (!), then the total number of atoms in all those universes combined would be close to the possibilities on a single Go board.
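The exponent arithmetic in the quote can be checked in a few lines of Python. The 10¹⁷⁰ and 10⁸⁰ figures come from the quote itself; the 3³⁶¹ count is a standard back-of-envelope upper bound (each of the 19 × 19 = 361 points is empty, black, or white) that overcounts because it includes illegal positions.

```python
import math

ATOMS_IN_UNIVERSE_EXP = 80   # ~10^80 atoms, figure quoted above
GO_POSITIONS_EXP = 170       # ~10^170 board positions, figure quoted above

# Each of the 361 points is empty, black, or white: 3^361 arrangements in total,
# an upper bound that also counts illegal positions.
upper_bound_exp = 361 * math.log10(3)

# Atoms across 10^80 universes that each hold 10^80 atoms: exponents add.
multiverse_atoms_exp = ATOMS_IN_UNIVERSE_EXP + ATOMS_IN_UNIVERSE_EXP

# How many powers of ten the Go figure still exceeds that total by.
gap_exp = GO_POSITIONS_EXP - multiverse_atoms_exp

print(f"3^361 is about 10^{upper_bound_exp:.1f}")
print(f"atoms across all universes: 10^{multiverse_atoms_exp}")
print(f"Go positions exceed that by a factor of 10^{gap_exp}")
```

Multiplying 10⁸⁰ universes by 10⁸⁰ atoms each gives 10¹⁶⁰, which is close to 10¹⁷⁰ only in a loose, order-of-magnitude sense: the Go figure is still ten billion times larger. Either way, exhaustively scanning every position is out of the question.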

Such confounding complexity rules out any brute-force approach that scans every possible move to determine the next best one. But deep neural networks get around that barrier in the same way our own minds do, by learning to estimate what feels like the best move. We do this through observation and practice, and so did AlphaGo, by analyzing millions of professional games and playing itself millions of times. So the answer to when the game of Go would fall to machines wasn’t even close to ten years. The correct answer ended up being, “Any time now.”

NONROUTINE AUTOMATION

Any time now. That’s the new go-to response in the 21st century for any question involving something new machines can do better than humans, and we need to try to wrap our heads around it.

We need to recognize what it means for exponential technological change to be entering the labor market for nonroutine jobs for the first time ever. Machines that can learn mean nothing humans do as a job is uniquely safe anymore. From hamburgers to healthcare, machines can be created to successfully perform such tasks with little or no need for humans, and at lower cost than humans.

Amelia is just one AI currently being beta-tested in companies. Created by IPsoft over the past 16 years, she’s learned how to perform the work of call center employees. She can learn in seconds what takes us months, and she can do it in 20 languages. Because she’s able to learn, she’s able to do more over time. At one company putting her through her paces, she successfully handled one of every ten calls in the first week, and by the end of the second month she could resolve six of ten. Because of this, it’s been estimated that she could put 250 million people out of a job worldwide.

Viv, an AI coming soon from the creators of Siri, is meant to be our own personal assistant. She’ll perform tasks online for us, and even function as a Facebook News Feed on steroids by suggesting the media she knows we’ll like best. Because she’ll do all of this for us, we’ll see far fewer ads, and that means the entire advertising industry, the industry the entire Internet is built upon, stands to be hugely disrupted.

A world with Amelia and Viv, the countless other AI counterparts coming online soon, and robots like Boston Dynamics’ next-generation Atlas is a world where machines can do all four types of jobs, and that calls for serious societal reconsideration. If a machine can do a job instead of a human, should any human be forced, under threat of destitution, to perform that job? Should income itself remain coupled to employment, such that having a job is the only way to obtain income, when jobs for many are entirely unobtainable? If machines are performing an increasing percentage of our jobs for us, and not getting paid to do them, where does that money go instead? And what does it no longer buy? Is it even possible that many of the jobs we’re creating don’t need to exist at all, and exist only because of the incomes they provide? These are questions we need to start asking, and fast.

DECOUPLING INCOME FROM WORK

Fortunately, people are beginning to ask these questions, and there’s an answer that’s building momentum. The idea is to put machines to work for us while empowering ourselves to seek out the forms of remaining work we as humans find most valuable, simply by providing everyone a monthly paycheck independent of work. This paycheck would be granted to all citizens unconditionally, and its name is universal basic income. Adopting UBI would not only immunize us against the negative effects of automation; it would also reduce the risks inherent in entrepreneurship and shrink the bureaucracies needed to boost incomes. For these reasons, it has cross-partisan support and is even now in the beginning stages of possible implementation in countries like Switzerland, Finland, the Netherlands, and Canada.

The future is a place of accelerating change. It seems unwise to keep looking at the future as if it were the past, assuming that because new jobs have historically appeared, they always will. The WEF started 2016 by estimating that 2 million new jobs would be created by 2020 while 7 million would be eliminated. That’s a net loss of 5 million jobs, not a net gain. In a frequently cited paper, an Oxford study estimated that about half of all existing jobs could be automated by 2033. Meanwhile, self-driving vehicles, again thanks to machine learning, could drastically affect all economies (especially the US economy, as I wrote last year about automating truck driving) by eliminating millions of jobs within a short span of time.

And now even the White House, in a stunning report to Congress, has put the probability at 83 percent that a worker making less than $20 an hour in 2010 will eventually lose their job to a machine. Even workers making as much as $40 an hour face odds of 31 percent. To ignore odds like these is tantamount to our now laughable “duck and cover” strategies for avoiding nuclear blasts during the Cold War.

All of this is why those most knowledgeable in the AI field are now actively sounding the alarm for basic income. During a panel discussion at the end of 2015 at Singularity University, prominent data scientist Jeremy Howard asked, “Do you want half of people to starve because they literally can’t add economic value, or not?” before going on to suggest, “If the answer is not, then the smartest way to distribute the wealth is by implementing a universal basic income.”

AI pioneer Chris Eliasmith, director of the Centre for Theoretical Neuroscience, warned about the immediate impacts of AI on society in an interview with Futurism, “AI is already having a big impact on our economies… My suspicion is that more countries will have to follow Finland’s lead in exploring basic income guarantees for people.”

Moshe Vardi expressed the same sentiment after speaking at the 2016 annual meeting of the American Association for the Advancement of Science about the emergence of intelligent machines, “we need to rethink the very basic structure of our economic system… we may have to consider instituting a basic income guarantee.”

Even Baidu’s chief scientist and founder of Google’s “Google Brain” deep learning project, Andrew Ng, during an onstage interview at this year’s Deep Learning Summit, expressed the shared notion that basic income must be “seriously considered” by governments, citing “a high chance that AI will create massive labor displacement.”

When those building the tools begin warning about the implications of their use, shouldn’t those wishing to use those tools listen with the utmost attention, especially when the livelihoods of millions of people are at stake? If not, what about when Nobel Prize-winning economists begin agreeing with them in increasing numbers?

No nation is yet ready for the changes ahead. High labor force non-participation leads to social instability, and a lack of consumers within consumer economies leads to economic instability. So let’s ask ourselves, what’s the purpose of the technologies we’re creating? What’s the purpose of a car that can drive for us, or artificial intelligence that can shoulder 60% of our workload? Is it to allow us to work more hours for even less pay? Or is it to enable us to choose how we work, and to decline any pay/hours we deem insufficient because we’re already earning the incomes that machines aren’t?

What’s the big lesson to learn, in a century when machines can learn?

I offer it’s that jobs are for machines, and life is for people.

This Selfless Supermom Has Decided To Give Birth To Her Baby With An Underdeveloped Brain And Donate Her Organs

Keri Young can feel her baby’s kicks and hear her heartbeat like any other expectant mother. But unlike other mothers, she won’t get to see her daughter grow up.

The condition is an untreatable one in which the baby’s brain does not develop properly in the womb. It’s so rare that it occurs in only six of every 1,000 births in the UK. Sadly, babies born with this condition tend to survive only a few days.

Within 30 seconds of learning Eva’s diagnosis, Keri asked the doctors whether she could continue the pregnancy so that Eva’s organs could help save the lives of other babies in desperate need of them.

On a Facebook post, Keri wrote: “This is our daughter’s perfect heart. She has perfect feet and hands. She has perfect kidneys, perfect lungs, and a perfect liver. Sadly, she doesn’t have a perfect brain.”

Her husband, Royce Young, praised his wife and their son, Harrison, in a post he shared last Friday, which received thousands of comments from people who were touched by their story.

“In literally the worst moment of her life, finding out her baby was going to die, it took her less than a minute to think of someone else and how her selflessness could help. It’s one of the most powerful things I’ve ever experienced.”

“Whenever Harrison gets hurt or has to pull a band-aid off or something, Keri will ask him, “Are you tough? Are you BRAVE?” And that little boy will nod his head and say, “I tough! I brave!” I’m looking at Keri right now and I don’t even have to ask. She’s TOUGH. She’s BRAVE. She’s incredible. She’s remarkable.”

“Not that I needed some awful situation like this to see all of that, but what it did was make me want to tell everyone else about it,” Royce wrote.

Physicists Have Modeled the Existence of Newly Discovered Tetraneutrons

IN BRIEF

- A new study has backed up previous research suggesting the existence of tetraneutrons, particles made of four neutrons.

- The research challenges current models of nuclear physics and means we may have to reexamine how we understand nuclear forces and objects like neutron stars.

SIMULATING THE IMPOSSIBLE

Back in February, physicists from Japan presented evidence that they may have generated tetraneutrons, a particle consisting of four neutrons. But we had no model that revealed how they could possibly exist.

Until now.

Now, an international team of scientists has used sophisticated supercomputer simulations to show how a tetraneutron could be quasi-stable, producing results that match the earlier Japanese experiment, according to a new study published in Physical Review Letters.

This is a remarkable find. First, neutrons on their own aren’t very stable: a free neutron decays into a proton with a half-life of about 10 minutes. Second, Pauli’s exclusion principle forbids identical particles such as neutrons from occupying the same quantum state within a system, which makes it hard for neutrons to cluster; under current theory, systems of two, three, or four neutrons were not expected to hold together.

But the simulations in the study support the possibility of tetraneutrons. They show how four-neutron systems could be quasi-stable, with a lifetime of merely 5 × 10⁻²² seconds. That may not seem like much, but it’s long enough for the particle to be studied.

The simulation matches the findings of the Japanese experiment neatly. That experiment bombarded helium-4 atoms with beams of helium-8, turning the helium-8 into helium-4 and leaving four neutrons over.

Those four neutrons are thought to form a tetraneutron briefly, for about one billionth of a trillionth of a second, before decaying into four separate neutrons.
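The two lifetimes given in this article agree to within a factor of two, as a quick unit conversion shows:

```latex
\[
\underbrace{10^{-9}}_{\text{one billionth}} \times \underbrace{10^{-12}\,\mathrm{s}}_{\text{one trillionth of a second}} = 10^{-21}\,\mathrm{s},
\qquad
5 \times 10^{-22}\,\mathrm{s} = 0.5 \times 10^{-21}\,\mathrm{s}.
\]
```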

NEW PHYSICS

If the existence of the tetraneutron is confirmed, we’d have to re-examine our understanding of nuclear forces. Current models require at least one proton to hold a nucleus together, so a tetraneutron would point to an interaction between neutrons that those models do not yet capture.

But there’s a sort of precedent for neutrons clustering together. Neutron stars, for instance, are made largely of neutrons, roughly 10⁵⁷ of them per star. Studying tetraneutrons could go a long way toward explaining how a ball of neutrons about seven miles in radius forms and holds itself together.
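The figure of about 10⁵⁷ neutrons per star can be recovered with a back-of-envelope estimate. The sketch assumes a typical neutron-star mass of 1.4 solar masses and, as a simplification, treats all of that mass as neutrons (ignoring protons, electrons, and gravitational binding energy); the constants are standard textbook values.

```python
import math

SOLAR_MASS_KG = 1.989e30     # mass of the Sun
NEUTRON_MASS_KG = 1.675e-27  # mass of one neutron

def neutron_count(mass_in_solar_masses):
    """Rough neutron count, assuming the star's entire mass is neutrons."""
    return mass_in_solar_masses * SOLAR_MASS_KG / NEUTRON_MASS_KG

n = neutron_count(1.4)  # 1.4 solar masses: an assumed, typical neutron-star mass
print(f"about {n:.1e} neutrons (order 10^{math.floor(math.log10(n))})")
```

Under these assumptions the estimate comes out at roughly 1.7 × 10⁵⁷, consistent with the order of magnitude quoted above.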

A New Type of Atomic Bond Has Been Discovered

IN BRIEF

- Physicists have observed the butterfly Rydberg molecule for the first time ever, confirming a 14-year-old prediction, and the existence of a whole new type of atomic bond.

- These molecules could be used in the development of molecular-scale electronics and machines because they require less energy to move.

For the first time, physicists have observed a strange molecule called the butterfly Rydberg molecule – a weak pairing of highly excitable atoms that was first predicted back in 2002.

The find not only confirms a 14-year-old prediction – it also confirms the existence of a whole new type of atomic bond.

Rydberg atoms form when an electron is kicked far from an atom’s nucleus, leaving the atom highly electronically excited.

On their own, they’re common enough. But back in 2002, a team of researchers from Purdue University in Indiana predicted that a Rydberg atom could attract and bind another atom, something that was thought impossible according to the understanding of atomic bonding at the time.

They called that hypothetical molecule combination the butterfly Rydberg molecule, because of the butterfly-like distribution of the orbiting electrons.

And now, 14 years later, the same team has finally observed a butterfly Rydberg molecule in the lab, and in the process, has discovered a whole new type of weak atomic bond.

“This new binding mechanism, in which an electron can grab and trap an atom, is really new from the point of view of chemistry,” explained lead researcher Chris Greene. “It’s a whole new way an atom can be bound by another atom.”

Rydberg molecules are unique because they can have electrons that are between 100-1,000 times further away from the nucleus than normal.
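The 100 to 1,000 times figure can be connected to how strongly the electron is excited. A common first approximation is the hydrogen-like Bohr model, in which the orbital radius grows as the square of the principal quantum number n (r = n²a₀, with a₀ ≈ 0.0529 nm the Bohr radius). The sketch below uses that model as an assumption: real rubidium Rydberg states deviate from it through so-called quantum defects, and “normal” is taken here to mean the hydrogen ground-state radius, so treat the numbers as order-of-magnitude only.

```python
import math

BOHR_RADIUS_NM = 0.0529177  # ground-state hydrogen orbital radius a0, in nanometres

def orbit_radius_nm(n):
    """Bohr-model orbital radius for principal quantum number n: r = n^2 * a0."""
    return n ** 2 * BOHR_RADIUS_NM

def smallest_n_for_ratio(ratio):
    """Smallest n whose Bohr radius is at least `ratio` times the ground-state radius."""
    return math.ceil(math.sqrt(ratio))

for ratio in (100, 1000):
    n = smallest_n_for_ratio(ratio)
    print(f"{ratio}x farther out: n = {n}, radius ≈ {orbit_radius_nm(n):.1f} nm")
```

Under these assumptions, a factor of 100 corresponds to n = 10 (about 5.3 nm) and a factor of 1,000 to n = 32 (about 54 nm).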

The team was able to create them for this experiment by cooling rubidium gas to a temperature of 100 nanokelvin (one ten-millionth of a degree above absolute zero), then exciting the atoms into a Rydberg state using lasers.

The team kept these Rydberg molecules under observation to see if they could indeed attract another atom. They were looking for any changes in the frequency of light the molecules could absorb, which would signal that binding had occurred.

Eventually, they discovered that the distant electrons could indeed help attract and bind with other atoms, just as they had predicted in 2002.

“This [distant] electron is like a sheepdog,” said Greene. “Every time it whizzes past another atom, this Rydberg atom adds a little attraction and nudges it toward one spot until it captures and binds the two atoms together.”

“It’s a really clear demonstration that this class of molecules exist,” he added.

These special butterfly Rydberg molecules are substantially larger than normal molecules due to their distantly orbiting electrons, and now that we know they exist, they could be used in the development of molecular-scale electronics and machines because they require less energy to move.

“The main excitement about this work in the atomic and molecular physics community has related to the fact that these huge molecules should exist and be observable, and that their electron density should exhibit amazingly rich, quantum mechanical peaks and valleys,” Greene told The Telegraph’s Roger Highfield in 2012.

What Is Intelligence?

Einstein said, “The true sign of intelligence is not knowledge but imagination.” Socrates said, “I know that I am intelligent, because I know that I know nothing.” For centuries, philosophers have tried to pinpoint the true measure of intelligence. More recently, neuroscientists have entered the debate, searching for answers about intelligence from a scientific perspective: What makes some brains smarter than others? Are intelligent people better at storing and retrieving memories? Or perhaps their neurons have more connections allowing them to creatively combine dissimilar ideas? How does the firing of microscopic neurons lead to the sparks of inspiration behind the atomic bomb? Or to Oscar Wilde’s wit?

Uncovering the neural networks involved in intelligence has proved difficult because, unlike, say, memory or emotions, there isn’t even a consensus as to what constitutes intelligence in the first place. It is widely accepted that there are different types of intelligence—analytic, linguistic, emotional, to name a few—but psychologists and neuroscientists disagree over whether these intelligences are linked or whether they exist independently from one another.

The 20th century produced three major theories on intelligence. The first, proposed by Charles Spearman in 1904, acknowledged that there are different types of intelligence but argued that they are all correlated: if people tend to do well on some sections of an IQ test, they tend to do well on all of them, and vice versa. So Spearman argued for a general intelligence factor called “g,” which remains controversial to this day. Decades later, Harvard psychologist Howard Gardner revised this notion with his Theory of Multiple Intelligences, which set forth eight distinct types of intelligence and claimed that there need be no correlation among them; a person could possess strong emotional intelligence without being gifted analytically. Later, in 1985, Robert Sternberg, the former dean of Tufts, put forward his Triarchic Theory of Intelligence, which argued that previous definitions of intelligence are too narrow because they are based solely on intelligences that can be assessed in IQ tests. Instead, Sternberg divides intelligence into three subsets: analytic, creative, and practical.

Dr. Gardner sat down with Big Think for a video interview and told us more about his Theory of Multiple Intelligences. He argues that these various forms of intelligence wouldn’t have evolved if they hadn’t been beneficial at some point in human history, but what was important in one time is not necessarily important in another. “As history unfolds, as cultures evolve, of course the intelligences which they value change,” Gardner tells us. “Until a hundred years ago, if you wanted to have higher education, linguistic intelligence was important. I teach at Harvard, and 150 years ago, the entrance exams were in Latin, Greek and Hebrew. If, for example, you were dyslexic, that would be very difficult because it would be hard for you to learn those languages, which are basically written languages.” Now, mathematical and emotional intelligences are more important in society, Gardner says: “While your IQ, which is sort of language logic, will get you behind the desk, if you don’t know how to deal with people, if you don’t know how to read yourself, you’re going to end up just staying at that desk forever or eventually being asked to make room for somebody who does have social or emotional intelligence.”

Big Think also interviewed Dr. Daniel Goleman, author of the bestselling “Emotional Intelligence,” and spoke with him about his theory of emotional intelligence, which comprises four major poles: self-awareness, self-management, social awareness, and relationship management.

Takeaway

Conflicts about the nature of intelligence have hampered studies of its neurobiological underpinnings for years. Yet neuroscientists Rex Jung and Richard Haier may have found a way around this impasse. In 2007 they published a study that reviewed 37 different neuroimaging studies of IQ (each with a different definition of intelligence) in an attempt to locate which parts of the brain were involved. As it turns out, regardless of the definition used, the results were similar enough that they were able to map a network of brain areas associated with increased IQ scores. Known as the Parieto-Frontal Integration Theory, this model has been gaining momentum among neuroscientists. Earlier this year, a team of researchers from Caltech, the University of Iowa, and USC surveyed the IQ test results of 241 brain-lesion patients. By comparing the locations of the patients’ brain lesions with their scores on the tests, they were able to discover which parts of the brain were associated with different types of intelligence. Their findings were very much in line with the parieto-frontal integration theory.

How to handle sleep deprivation, according to a Navy SEAL

Everybody always says the same thing when you announce you’re expecting: “Better catch up on your rest!” Or, “Sleep in while you still can!”

Or even worse, “I’m your carefree single friend who stays out until 2 AM and then goes to brunch!”

All of them also think they’re sharing a secret, as if they’re frontline soldiers watching new recruits get rotated to the front. These people are incredibly annoying. Or maybe they’re not. Who knows, you’re in a groggy, sleep-deprived haze.

How you deal with sleep deprivation defines your first years as a parent. If there’s anyone who knows a thing or two about propping up sagging eyelids, it’s John McGuire.

A former Navy SEAL, he survived not only Hell Week, that notorious 5-day suffer-fest in which aspiring SEALs are permitted a total of only 4 hours of sleep, but also the years of sleep deprivation that come with being a father of 5. McGuire, who’s also an in-demand motivational speaker and founder of the SEAL Team Physical Training program, offered some battle-tested strategies on how to make it through the ultimate Hell Week.

Or as you call it, “having a newborn.”

Get your head right

Whether it’s a live SEAL team operation or an average day with a baby, the most powerful tactic is keeping your wits about you.

“You can’t lose your focus or discipline,” McGuire says. In other words, the first step is simply to believe you have what it takes to best the challenge ahead. “Self-doubt destroys more dreams than failure ever has.”

This applies to CEOs, heads of households, and operatives who don’t exist, undertaking missions that never happened, taking out targets the Pentagon will not confirm.

Teamwork makes the lack of sleep work

“In the field, lack of communication can get someone killed,” says McGuire.

And while you might not face the same stress during a midnight diaper blowout as you would sweeping for an IED, the same rules apply: remain calm and work as a team.

Tempers will flare, but the last thing that you want, per McGuire, is for negativity to seep through.

One way to prevent this? Remind yourself: “I didn’t get a lot of sleep, but I love my family, so I’m going to really watch what I say.” At least, that’s what McGuire says.

And when communicating, be mindful of your current sleep-deprived state: “If you are, you’ll be more likely to say something along the lines of, ‘Hey, I’m not feeling myself because I didn’t get enough sleep,’” he says.

Put the oxygen mask on yourself first

The more you can schedule your life – and, in particular, exercise – the better, says McGuire. And this is certainly a tactic that’s important with a newborn in the house.

“It’s like on an airplane: You need to place the oxygen mask on yourself first before you can put one on your kid.”

Exercise reduces stress, helps you sleep better, and gets the endorphins pumping.

“You can hold your baby and do squats if you want,” he says. “It’s not as much about the squats as making sure you exercise and clear the mind.” Did you hear that, maggot?!

Don’t try to be a hero

McGuire has heard people say that taking naps longer than 20 minutes will make you more tired than you were before you napped. Tell that to a SEAL (or a new dad). McGuire has seen guys sleep on wood pallets on an airplane flying through lightning and turbulence. He once saw a guy fall asleep standing up. The point is, sleep when you can, wherever you can, for as long as you can. “Sleep is like water: you need it when you need it.”

Know your limits

Lack of proper sleep leads to more than under-eye bags: your patience plummets, you’re more likely to gorge on unhealthy foods, and, well, you’re kind of a dummy.

So pay attention to what you shouldn’t do as much as to what you should. “A good leader makes decisions to improve things, not make them worse,” says McGuire. “If you’re in bad shape, you could fall asleep at the wheel; you could harm your child. You’ve got to take care of yourself.”

Embrace the insanity

It would be cute if this next sentiment came from training, but it’s probably more a function of McGuire the Dad than McGuire the SEAL: Embrace the challenge because it won’t last long.

Even McGuire’s brood of 5, who at some point may have seemed as if they would never grow up, have done just that.

“You learn a lot about people and yourself through your children,” he says. “Have lots of adventures. Take lots of pictures and give lots of hugs.” It won’t last forever, and you’ll have plenty of time to sleep when it’s over.

The first woman to visit every country in the world has released her 10 favourite destinations

Cassandra De Pecol has just set Guinness World Records, becoming:

- The first documented woman to travel to every sovereign nation

- The first American woman to travel to every sovereign nation

- The youngest American to travel to every sovereign nation (at 27)

- The fastest person to travel to every sovereign nation

In case you were wondering, there are 196 sovereign nations on the planet to date.

Beginning when Cassie was 23 on the Pacific island of Palau in July 2015, the trip lasted 18 months and 26 days – half the time of the previous Guinness World Record.

Her journey, Expedition196, was funded almost entirely by sponsorship and has served as a showcase for sustainability and ethical, eco-friendly tourism.

Now I know we’ve all had enough of experts, but if you were going to take travel tips from anyone…

These are, according to De Pecol, the ten best countries in the entire world to visit, and why:

10. USA

Fall in New England is something everyone should experience.

They say home is where the heart is, and the more I travel, the more real that becomes to me.

Home is where family is, it’s where my safety net is, it’s where everything that I’m familiar with is, and my country is rich in nature, which is important to me.

9. Costa Rica, Central America

Monkeys, fresh fruit, good music and volcanoes…need I say more?

8. Peru, South America

The Amazon rainforest and Aguas Calientes [gateway to Machu Picchu].

De Pecol also spent some time living in Peru.

7. Tunisia, North Africa

To experience northern African culture with a Middle Eastern feel and an immense amount of archaeological history.

According to De Pecol, the town of Sidi Bou Said (20km from Tunis, the capital) “blew me away”.

6. Oman, Arabian Peninsula

To immerse yourself in the desert and mountains while learning from locals who live in the mountains; it’s a whole different lifestyle.

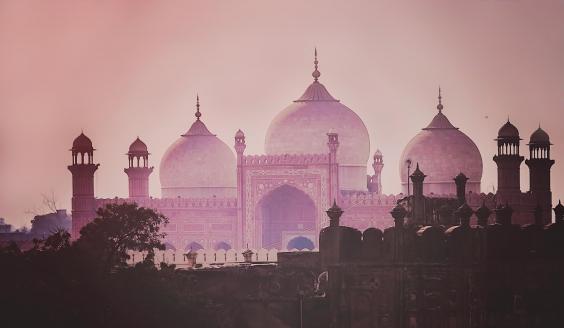

5. Pakistan

To get a true sense of raw, authentic Asian culture, and for the food.

De Pecol especially appreciated Pakistan, because she had to wait four months for her visa approval.

4. Vanuatu, South Pacific

To experience how kava is made and to meet some of the kindest people.

3. Maldives

To see some of the bluest water, whitest sand and most stunning sand banks in the world.

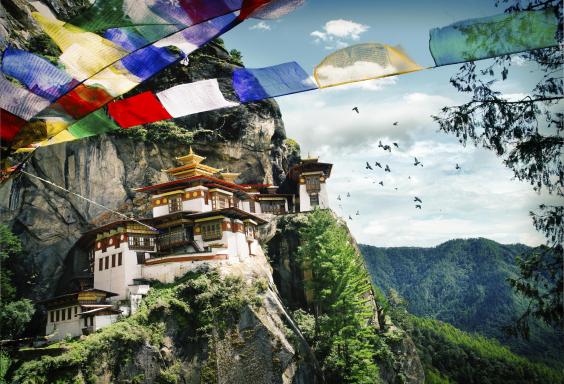

2. Bhutan

To learn the ethics of peaceful living.

The pilgrimage [to Paro Taktsang] was something out of Avatar, a dream to trek through low-hanging clouds with a harrowing drop at any given moment on either side.

Prayer flags swayed through the pines, prayer wheels spun in the breeze, and tsa-tsas (ashes of the dead) were wedged into crevices in the stone.

1. Mongolia

To be immersed in the remote wilderness and to ride the wild horses.

Officials: Fukushima Has Now Contaminated 1/3 Of The World’s Oceans

The entire Pacific Ocean, which covers almost one-third of our planet, is now thought to have been contaminated by the radioactive leak from the Fukushima nuclear disaster, according to researchers.

In 2011 the International Atomic Energy Agency (IAEA), which seeks to promote the peaceful use of nuclear power, established a joint IAEA Technical Cooperation (TC) project in the Pacific Ocean region with the Regional Cooperative Agreement (RCA) Member States. It was set up after the Fukushima disaster, in which a tsunami caused by a major earthquake on 11 March 2011 disabled the power supply and cooling of three Fukushima Daiichi reactors, causing a nuclear accident.

As a result, a large quantity of radioactive material was released into the Pacific Ocean. Unsurprisingly, this caused great concern among countries around the Pacific because of the potential economic and environmental implications. The TC project’s aim was therefore to monitor the presence of radioactive substances in the marine environment.

The first annual review meeting, held in August 2012, presented predictive hydrodynamic models. These predicted that the strong Kuroshio Current and its extension could carry the radioactive substances eastward across the Pacific Ocean.

However, the concentration of radioactivity was not as high as originally thought. The vast expanse of ocean had diluted it substantially, so radioactivity remained at low levels, but there was still concern over the contamination of seafood even at these levels.

The marine monitoring project was therefore established to ensure that the region’s seafood was safe to eat and to maintain a comprehensive, factual overview of the situation, given its grave implications. The TC project is due to conclude this year. A few results have caused concern. In a field study conducted on 2 July 2014, two filter cartridges from two sets of seawater samples were found to be coated with traces of cesium, a radioactive element. Then, more recently, trace amounts of cesium-134 and cesium-137 turned up in samples collected near Vancouver Island in British Columbia. These samples were collected independently of the IAEA monitoring project, but according to the Integrated Fukushima Ocean Radionuclide Monitoring (InFORM) Network, Fukushima is thought to be the only possible source of these radioactive elements.

This was the first time traces of cesium-134 had been detected near North America. While these are trace amounts, the danger of radioactive material in any amount should not be underestimated. However, experts say the levels detected cannot really harm us; they are still lower than the dose we could receive from a dental X-ray, for instance. Having said that, every exposure, however small, adds up.

The problem with nuclear energy and fallout is that radiation and radioactive materials can travel far and wide on the wind and with the sea. We should therefore aim, at a global level, to keep these levels at zero. In any event, the oceans will need continuous monitoring, according to Ken Buesseler, a marine chemist at the Woods Hole Oceanographic Institution. What Buesseler says should be taken on board beyond 2015, particularly since the IAEA’s advice is to dump even more contaminated water into the sea.

This is apparently preferable to holding the water in tanks. Any discharge would have to be controlled, and continuous monitoring would be needed, particularly near the plant, to improve data reliability. This is causing concern, and not just to state authorities. Consider the fishermen: every time they catch fish in the ocean, the fish need to be tested for radioactivity. Before any further dumping is done, the IAEA and Tokyo Electric Power Co., which controls the plant, need to consider not only the environmental impact but the socio-economic impact as well.

Livelihoods could be affected, as could the long-term health of the region and, eventually, of the global community.

Watch the video: https://youtu.be/cCB_U5hLBoc