Moore’s Law

Will the End of Moore’s Law Halt Computing’s Exponential Rise?

“… exponential trends commonly do.” –Ray Kurzweil, The Singularity Is Near

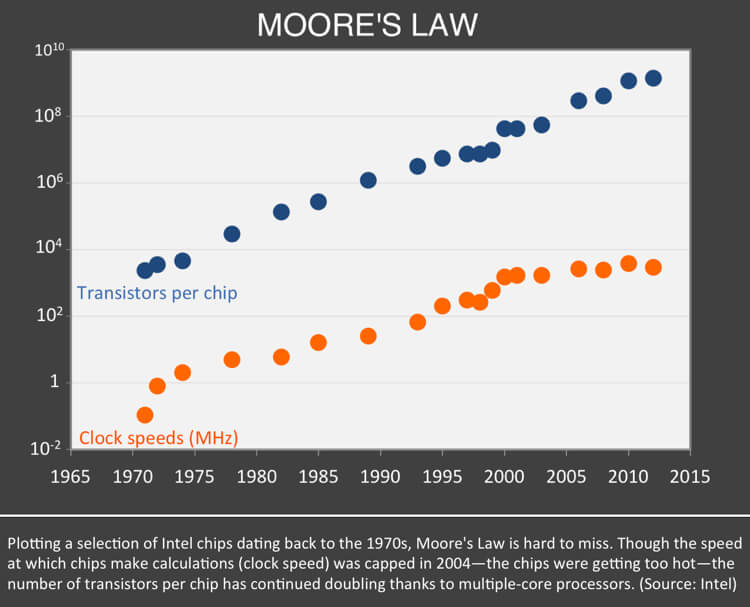

Much of the future we envision today depends on the exponential progress of information technology, most popularly illustrated by Moore’s Law. Thanks to shrinking processors, computers have gone from plodding, room-sized monoliths to the quick devices in our pockets or on our wrists. Looking back, this accelerating progress is hard to miss—it’s been amazingly consistent for over five decades.

But how long will it continue?

This post will explore Moore’s Law, the five paradigms of computing (as described by Ray Kurzweil), and the reason many are convinced that exponential trends in computing will not end anytime soon.

What Is Moore’s Law?

“In brief, Moore’s Law predicts that computing chips will shrink by half in size and cost every 18 to 24 months. For the past 50 years it has been astoundingly correct.” –Kevin Kelly, What Technology Wants

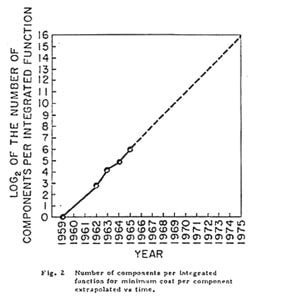

In 1965, Fairchild Semiconductor’s Gordon Moore (later cofounder of Intel) had been closely watching early integrated circuits. He realized that as components were getting smaller, the number that could be crammed on a chip was regularly rising and processing power along with it.

Based on just five data points dating back to 1959, Moore estimated the time it took to double the number of computing elements per chip was 12 months (a number he later revised to 24 months), and that this steady exponential trend would result in far more power for less cost.
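Moore's estimate amounts to fitting a straight line to the logarithm of component counts over time. The sketch below shows that arithmetic under stated assumptions. The five data points are synthetic: they were chosen to double exactly every year and are not Moore's actual 1965 figures. The function names `doubling_time` and `project` are hypothetical helpers for illustration.

```python
import math

def doubling_time(years, counts):
    """Least-squares fit of log2(count) against year; returns years per doubling."""
    logs = [math.log2(c) for c in counts]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    slope = sxy / sxx          # fitted doublings per year
    return 1.0 / slope         # years per doubling

def project(count0, years_elapsed, doubling_years):
    """Count after `years_elapsed` years if it doubles every `doubling_years`."""
    return count0 * 2 ** (years_elapsed / doubling_years)

# Synthetic points (hypothetical, not Moore's data) that double once a year.
years = [1959, 1962, 1963, 1964, 1965]
counts = [1, 8, 16, 32, 64]
print(round(doubling_time(years, counts), 3))  # 1.0
print(project(1, 10, 1.0))                     # 1024.0
print(project(1, 10, 2.0))                     # 32.0
```

The same helper shows why the revision mattered. Over a decade, a 12-month doubling time compounds to a 1,024-fold increase, while a 24-month doubling time gives a 32-fold increase.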

Soon it became clear Moore was right, but amazingly, this doubling didn’t taper off in the mid-70s—chip manufacturing has largely kept the pace ever since. Today, affordable computer chips pack a billion or more transistors spaced nanometers apart.

Moore’s Law has been solid as a rock for decades, but the core technology’s ascent won’t last forever. Many believe the trend is losing steam, and it’s unclear what comes next.

Experts, including Gordon Moore, have noted Moore’s Law is less a law and more a self-fulfilling prophecy, driven by businesses spending billions to match the expected exponential pace. Since 1991, the semiconductor industry has regularly produced a technology roadmap to coordinate their efforts and spot problems early.

In recent years, the chipmaking process has become increasingly complex and costly. After processor speeds leveled off in 2004 because chips were overheating, multiple-core processors took the baton. But now, as feature sizes approach near-atomic scales, quantum effects are expected to render chips too unreliable.

This year, for the first time, the semiconductor industry roadmap will no longer use Moore’s Law as a benchmark, focusing instead on other attributes, like efficiency and connectivity, demanded by smartphones, wearables, and beyond.

As the industry shifts focus and Moore’s Law appears to be approaching a limit, is this the end of exponential progress in computing, or might it continue a while longer?

Moore’s Law Is the Latest Example of a Larger Trend

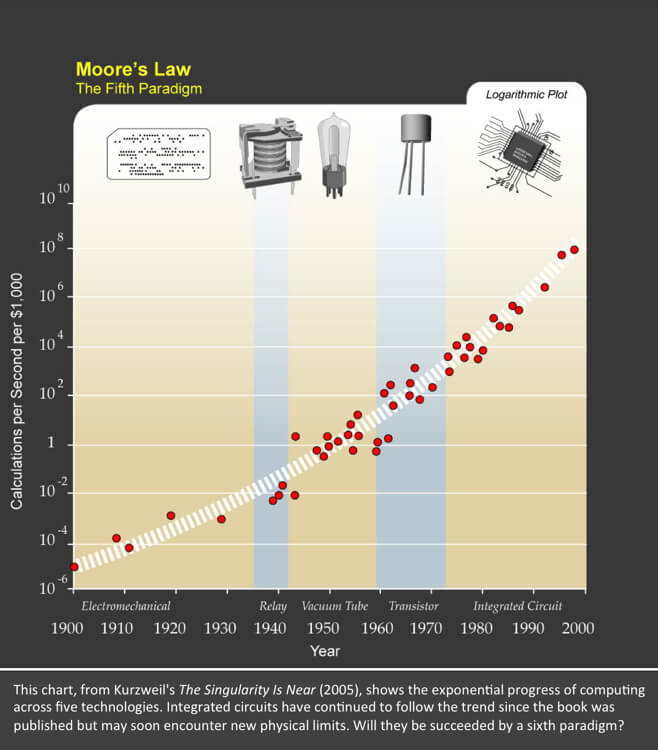

“Moore’s Law is actually not the first paradigm in computational systems. You can see this if you plot the price-performance—measured by instructions per second per thousand constant dollars—of forty-nine famous computational systems and computers spanning the twentieth century.” –Ray Kurzweil, The Singularity Is Near

While exponential growth in recent decades has been in integrated circuits, a larger trend is at play, one identified by Ray Kurzweil in his book, The Singularity Is Near. Because the chief outcome of Moore’s Law is more powerful computers at lower cost, Kurzweil tracked computational speed per $1,000 over time.
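Kurzweil's yardstick, instructions per second per thousand constant dollars, becomes a one-line formula once prices are converted to constant dollars. The sketch below assumes a simple price-index deflator (the hypothetical `cpi_then` and `cpi_base` parameters) and uses a made-up machine. It is not Kurzweil's dataset, and it may not match his exact inflation adjustment.

```python
def ips_per_thousand_constant_dollars(ips, nominal_price, cpi_then, cpi_base):
    """Price-performance: instructions per second per $1,000 of constant (inflation-adjusted) dollars."""
    # Convert the purchase price into base-year dollars with a simple price-index ratio.
    constant_price = nominal_price * cpi_base / cpi_then
    # Divide speed by the number of thousand-dollar units the machine cost.
    return ips / (constant_price / 1000.0)

# Hypothetical machine: 1 million instructions per second, bought for $1,000,000
# when the price index stood at 50, expressed in dollars of a year when it stood at 250.
print(ips_per_thousand_constant_dollars(1e6, 1_000_000, 50, 250))  # 200.0
```

Because price sits in the denominator, the metric rewards both faster machines and cheaper ones. That is what lets it place relays, vacuum tubes and integrated circuits on a single chart.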

This measure accounts for all the “levels of ‘cleverness’” baked into every chip—such as different industrial processes, materials, and designs—and allows us to compare other computing technologies from history. The result is surprising.

The exponential trend in computing began well before Moore noticed it in integrated circuits or the industry began collaborating on a roadmap. According to Kurzweil, Moore’s Law is the fifth computing paradigm. The first four include computers using electromechanical, relay, vacuum tube, and discrete transistor computing elements.

There May Be ‘Moore’ to Come

“When Moore’s Law reaches the end of its S-curve, now expected before 2020, the exponential growth will continue with three-dimensional molecular computing, which will constitute the sixth paradigm.” –Ray Kurzweil, The Singularity Is Near

While the death of Moore’s Law has often been predicted, today’s integrated circuits do appear to be nearing physical limits that will be challenging to overcome, and many believe silicon chips will level off in the next decade. So, will exponential progress in computing end too? Not necessarily, according to Kurzweil.

The integrated circuits described by Moore’s Law, he says, are just the latest technology in a larger, longer exponential trend in computing—one he thinks will continue. Kurzweil suggests integrated circuits will be followed by a new 3D molecular computing paradigm (the sixth) whose technologies are now being developed. (We’ll explore candidates for potential successor technologies to Moore’s Law in future posts.)

Kurzweil isn’t predicting that exponential growth in computing will continue forever; it will inevitably hit a ceiling. Perhaps his most audacious idea is that the ceiling is much further away than we realize.

How Does This Affect Our Lives?

Computing is already a driving force in modern life, and its influence will only increase. Artificial intelligence, automation, robotics, virtual reality, unraveling the human genome—these are a few world-shaking advances computing enables.

If we’re better able to anticipate this powerful trend, we can plan for its promise and peril, and instead of being taken by surprise, we can make the most of the future.

Kevin Kelly puts it best in What Technology Wants:

“Imagine it is 1965. You’ve seen the curves Gordon Moore discovered. What if you believed the story they were trying to tell us…You would have needed no other prophecies, no other predictions, no other details to optimize the coming benefits. As a society, if we just believed that single trajectory of Moore’s, and none other, we would have educated differently, invested differently, prepared more wisely to grasp the amazing powers it would sprout.”

Never Mind Moore’s Law: Transistors Just Got A Whole Lot Smaller

IN BRIEF

- Researchers have successfully created a transistor 50,000 times smaller than a strand of hair.

- Surging past Moore’s Law, these transistors could be an exponential leap forward in computing capabilities.

THE SMALLER, THE BETTER

Transistors are semiconductor devices that serve as the building blocks of modern computer hardware. They are already very small, and making them smaller still is an important part of improving computer technology. That’s what a team from the Department of Energy’s Lawrence Berkeley National Laboratory managed to do, according to a study published in the journal Science.

Transistors in current chips are built on 14nm process technology, with 10nm chips expected in 2017 or 2018, reportedly in Intel’s Cannon Lake line. That schedule follows Intel co-founder Gordon Moore’s prediction that transistor density on integrated circuits would double every two years, improving computer electronics.
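The link between a node name and "doubling" is geometric. Shrinking every linear dimension by a factor k packs roughly k² as many transistors into the same area. The sketch below assumes node names are true linear dimensions, which is a simplification, since modern node labels no longer map directly onto any single physical feature. `density_gain` and `density_after` are hypothetical helpers.

```python
def density_gain(old_node_nm, new_node_nm):
    """Approximate transistor-density gain from a linear shrink (area scales with the square)."""
    return (old_node_nm / new_node_nm) ** 2

def density_after(years, doubling_years=2.0):
    """Relative density after `years` if density doubles every `doubling_years`."""
    return 2 ** (years / doubling_years)

print(round(density_gain(14, 10), 2))  # 1.96, i.e. roughly one doubling
print(density_after(6))                # 8.0 after three two-year doublings
```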

Berkeley Lab’s team seems to have beaten them to it, developing a working transistor with a 1nm gate.

KEEPING UP WITH MOORE’S LAW

“We made the smallest transistor reported to date,” says lead scientist Ali Javey. “The gate length is considered a defining dimension of the transistor. We demonstrated a 1-nanometer-gate transistor, showing that with the choice of proper materials, there is a lot more room to shrink our electronics.”

Silicon-based transistors work well at 7nm but fail below 5nm, where electrons start experiencing a severe short-channel effect called quantum tunneling. Electrons flowing through silicon are relatively light and move with little resistance. At gate lengths of 5nm and below, however, that same property means the gate can no longer control the electron flow and keep the transistor in its intended logic state. “This means we can’t turn off the transistors,” said researcher Sujay Desai. “The electrons are out of control.”

The Berkeley Lab team found a better material in molybdenum disulfide (MoS2). Electrons flowing through MoS2 are heavier, making them easier to control even at shorter gate lengths. MoS2’s dielectric properties, which govern how it stores energy in an electric field, also help the gate keep control of the current. With a carbon nanotube about 1nm in diameter serving as the gate, this produced the shortest transistor reported to date.

“However, it’s a proof of concept. We have not yet packed these transistors onto a chip, and we haven’t done this billions of times over. We also have not developed self-aligned fabrication schemes for reducing parasitic resistances in the device,” Javey admits.

Still, it’s foundational work that keeps Moore’s Law alive a little longer.

The chips are down for Moore’s law

The semiconductor industry will soon abandon its pursuit of Moore’s law. Now things could get a lot more interesting.

Next month, the worldwide semiconductor industry will formally acknowledge what has become increasingly obvious to everyone involved: Moore’s law, the principle that has powered the information-technology revolution since the 1960s, is nearing its end.

A rule of thumb that has come to dominate computing, Moore’s law states that the number of transistors on a microprocessor chip will double every two years or so — which has generally meant that the chip’s performance will, too. The exponential improvement that the law describes transformed the first crude home computers of the 1970s into the sophisticated machines of the 1980s and 1990s, and from there gave rise to high-speed Internet, smartphones and the wired-up cars, refrigerators and thermostats that are becoming prevalent today.

None of this was inevitable: chipmakers deliberately chose to stay on the Moore’s law track. At every stage, software developers came up with applications that strained the capabilities of existing chips; consumers asked more of their devices; and manufacturers rushed to meet that demand with next-generation chips. Since the 1990s, in fact, the semiconductor industry has released a research road map every two years to coordinate what its hundreds of manufacturers and suppliers are doing to stay in step with the law — a strategy sometimes called More Moore. It has been largely thanks to this road map that computers have followed the law’s exponential demands.

Not for much longer. The doubling has already started to falter, thanks to the heat that is unavoidably generated when more and more silicon circuitry is jammed into the same small area. And some even more fundamental limits loom less than a decade away. Top-of-the-line microprocessors currently have circuit features that are around 14 nanometres across, smaller than most viruses. But by the early 2020s, says Paolo Gargini, chair of the road-mapping organization, “even with super-aggressive efforts, we’ll get to the 2–3-nanometre limit, where features are just 10 atoms across. Is that a device at all?” Probably not — if only because at that scale, electron behaviour will be governed by quantum uncertainties that will make transistors hopelessly unreliable. And despite vigorous research efforts, there is no obvious successor to today’s silicon technology.
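Gargini's "10 atoms across" is easy to sanity-check. The sketch assumes that atoms are spaced at silicon's nearest-neighbour (Si-Si bond) distance of about 0.235 nm. Real device features are not perfect rows of atoms, so the result is only an order-of-magnitude check. `atoms_across` is a hypothetical helper.

```python
SI_SI_BOND_NM = 0.235  # assumed nearest-neighbour spacing of silicon atoms, in nanometres

def atoms_across(feature_nm, spacing_nm=SI_SI_BOND_NM):
    """Rough count of atoms spanning a feature of the given width."""
    return feature_nm / spacing_nm

for width in (2.0, 3.0, 14.0):
    print(f"{width:4.1f} nm -> about {atoms_across(width):.0f} atoms")
```

Under this assumption, the 2 to 3 nm range works out to roughly 9 to 13 atoms, consistent with Gargini's figure, while a 14 nm feature spans about 60.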

The industry road map released next month will for the first time lay out a research and development plan that is not centred on Moore’s law. Instead, it will follow what might be called the More than Moore strategy: rather than making the chips better and letting the applications follow, it will start with applications — from smartphones and supercomputers to data centres in the cloud — and work downwards to see what chips are needed to support them. Among those chips will be new generations of sensors, power-management circuits and other silicon devices required by a world in which computing is increasingly mobile.

The changing landscape, in turn, could splinter the industry’s long tradition of unity in pursuit of Moore’s law. “Everybody is struggling with what the road map actually means,” says Daniel Reed, a computer scientist and vice-president for research at the University of Iowa in Iowa City. The Semiconductor Industry Association (SIA) in Washington DC, which represents all the major US firms, has already said that it will cease its participation in the road-mapping effort once the report is out, and will instead pursue its own research and development agenda.

Everyone agrees that the twilight of Moore’s law will not mean the end of progress. “Think about what happened to airplanes,” says Reed. “A Boeing 787 doesn’t go any faster than a 707 did in the 1950s — but they are very different airplanes”, with innovations ranging from fully electronic controls to a carbon-fibre fuselage. That’s what will happen with computers, he says: “Innovation will absolutely continue — but it will be more nuanced and complicated.”

Laying down the law

The 1965 essay1 that would make Gordon Moore famous started with a meditation on what could be done with the still-new technology of integrated circuits. Moore, who was then research director of Fairchild Semiconductor in San Jose, California, predicted wonders such as home computers, digital wristwatches, automatic cars and “personal portable communications equipment” — mobile phones. But the heart of the essay was Moore’s attempt to provide a timeline for this future. As a measure of a microprocessor’s computational power, he looked at transistors, the on–off switches that make computing digital. On the basis of achievements by his company and others in the previous few years, he estimated that the number of transistors and other electronic components per chip was doubling every year.

Moore, who would later co-found Intel in Santa Clara, California, underestimated the doubling time; in 1975, he revised it to a more realistic two years2. But his vision was spot on. The future that he predicted started to arrive in the 1970s and 1980s, with the advent of microprocessor-equipped consumer products such as the Hewlett-Packard hand calculators, the Apple II computer and the IBM PC. Demand for such products was soon exploding, and manufacturers were engaging in a brisk competition to offer more and more capable chips in smaller and smaller packages (see ‘Moore’s lore’).

Source: Top, Intel; bottom, SIA/SRC

This was expensive. Improving a microprocessor’s performance meant scaling down the elements of its circuit so that more of them could be packed together on the chip, and electrons could move between them more quickly. Scaling, in turn, required major refinements in photolithography, the basic technology for etching those microscopic elements onto a silicon surface. But the boom times were such that this hardly mattered: a self-reinforcing cycle set in. Chips were so versatile that manufacturers could make only a few types — processors and memory, mostly — and sell them in huge quantities. That gave them enough cash to cover the cost of upgrading their fabrication facilities, or ‘fabs’, and still drop the prices, thereby fuelling demand even further.

Soon, however, it became clear that this market-driven cycle could not sustain the relentless cadence of Moore’s law by itself. The chip-making process was getting too complex, often involving hundreds of stages, which meant that taking the next step down in scale required a network of materials-suppliers and apparatus-makers to deliver the right upgrades at the right time. “If you need 40 kinds of equipment and only 39 are ready, then everything stops,” says Kenneth Flamm, an economist who studies the computer industry at the University of Texas at Austin.

To provide that coordination, the industry devised its first road map. The idea, says Gargini, was “that everyone would have a rough estimate of where they were going, and they could raise an alarm if they saw roadblocks ahead”. The US semiconductor industry launched the mapping effort in 1991, with hundreds of engineers from various companies working on the first report and its subsequent iterations, and Gargini, then the director of technology strategy at Intel, as its chair. In 1998, the effort became the International Technology Roadmap for Semiconductors, with participation from industry associations in Europe, Japan, Taiwan and South Korea. (This year’s report, in keeping with its new approach, will be called the International Roadmap for Devices and Systems.)

“The road map was an incredibly interesting experiment,” says Flamm. “So far as I know, there is no example of anything like this in any other industry, where every manufacturer and supplier gets together and figures out what they are going to do.” In effect, it converted Moore’s law from an empirical observation into a self-fulfilling prophecy: new chips followed the law because the industry made sure that they did.

And it all worked beautifully, says Flamm — right up until it didn’t.

Heat death

The first stumbling block was not unexpected. Gargini and others had warned about it as far back as 1989. But it hit hard nonetheless: things got too small.

“It used to be that whenever we would scale to smaller feature size, good things happened automatically,” says Bill Bottoms, president of Third Millennium Test Solutions, an equipment manufacturer in Santa Clara. “The chips would go faster and consume less power.”

But in the early 2000s, when the features began to shrink below about 90 nanometres, that automatic benefit began to fail. As electrons had to move faster and faster through silicon circuits that were smaller and smaller, the chips began to get too hot.

That was a fundamental problem. Heat is hard to get rid of, and no one wants to buy a mobile phone that burns their hand. So manufacturers seized on the only solutions they had, says Gargini. First, they stopped trying to increase ‘clock rates’ — how fast microprocessors execute instructions. This effectively put a speed limit on the chip’s electrons and limited their ability to generate heat. The maximum clock rate hasn’t budged since 2004.
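The textbook Dennard scaling model shows why shrinking stopped delivering "good things automatically". The article does not name the model, but it describes the earlier regime Bottoms refers to. In that model, dynamic power per transistor is roughly proportional to capacitance × voltage² × clock frequency. The sketch below is idealised and ignores leakage current. `power_density_ratio` is a hypothetical helper, and the shrink factor `k` is the ratio of old to new linear dimensions.

```python
def power_density_ratio(k, scale_voltage=True, scale_frequency=True):
    """Chip power per unit area after a linear shrink by factor k, relative to before.

    Idealised model: dynamic power per transistor ~ C * V**2 * f; leakage is ignored.
    """
    density = k ** 2                              # transistors per unit area rise as k squared
    capacitance = 1 / k                           # smaller transistors have proportionally less capacitance
    voltage = 1 / k if scale_voltage else 1.0     # classic scaling lowered the supply voltage too
    frequency = k if scale_frequency else 1.0     # shorter distances allowed faster clocks
    return density * capacitance * voltage ** 2 * frequency

print(power_density_ratio(2))                                              # 1.0: heat per area unchanged
print(power_density_ratio(2, scale_voltage=False))                         # 4.0: voltage stuck, clocks rising
print(power_density_ratio(2, scale_voltage=False, scale_frequency=False))  # 2.0: clocks frozen as well
```

In this model, freezing the clock rate halves the growth in heat per area but does not eliminate it. That matches the article's point that further redesigns were still needed to keep shrinking.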

Second, to keep the chips moving along the Moore’s law performance curve despite the speed limit, they redesigned the internal circuitry so that each chip contained not one processor, or ‘core’, but two, four or more. (Four and eight are common in today’s desktop computers and smartphones.) In principle, says Gargini, “you can have the same output with four cores going at 250 megahertz as one going at 1 gigahertz”. In practice, exploiting eight processors means that a problem has to be broken down into eight pieces — which for many algorithms is difficult to impossible. “The piece that can’t be parallelized will limit your improvement,” says Gargini.
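Gargini's warning about "the piece that can't be parallelized" is usually formalised as Amdahl's law, which the article does not name. If a fraction p of a program can be spread across n cores, the best-case speed-up is 1 / ((1 − p) + p/n). The sketch below is a minimal illustration. `amdahl_speedup` is a hypothetical helper, and real workloads also pay communication and synchronisation costs that the formula ignores.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Best-case speed-up on `cores` cores when only `parallel_fraction` of the work parallelises."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)

# Perfectly parallel work: four 250 MHz cores match one 1 GHz core.
print(250e6 * amdahl_speedup(1.0, 4))        # 1000000000.0
# 90% parallel work: eight cores give under 5x, and no number of cores can exceed 10x.
print(round(amdahl_speedup(0.9, 8), 2))      # 4.71
print(round(amdahl_speedup(0.9, 10**6), 2))  # 10.0
```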

Even so, when combined with creative redesigns to compensate for electron leakage and other effects, these two solutions have enabled chip manufacturers to continue shrinking their circuits and keeping their transistor counts on track with Moore’s law. The question now is what will happen in the early 2020s, when continued scaling is no longer possible with silicon because quantum effects have come into play. What comes next? “We’re still struggling,” says An Chen, an electrical engineer who works for the international chipmaker GlobalFoundries in Santa Clara, California, and who chairs a committee of the new road map that is looking into the question.

That is not for a lack of ideas. One possibility is to embrace a completely new paradigm — something like quantum computing, which promises exponential speed-up for certain calculations, or neuromorphic computing, which aims to model processing elements on neurons in the brain. But none of these alternative paradigms has made it very far out of the laboratory. And many researchers think that quantum computing will offer advantages only for niche applications, rather than for the everyday tasks at which digital computing excels. “What does it mean to quantum-balance a chequebook?” wonders John Shalf, head of computer-science research at the Lawrence Berkeley National Laboratory in Berkeley, California.

Material differences

A different approach, which does stay in the digital realm, is the quest to find a ‘millivolt switch’: a material that could be used for devices at least as fast as their silicon counterparts, but that would generate much less heat. There are many candidates, ranging from 2D graphene-like compounds to spintronic materials that would compute by flipping electron spins rather than by moving electrons. “There is an enormous research space to be explored once you step outside the confines of the established technology,” says Thomas Theis, a physicist who directs the nanoelectronics initiative at the Semiconductor Research Corporation (SRC), a research-funding consortium in Durham, North Carolina.


Unfortunately, no millivolt switch has made it out of the laboratory either. That leaves the architectural approach: stick with silicon, but configure it in entirely new ways. One popular option is to go 3D. Instead of etching flat circuits onto the surface of a silicon wafer, build skyscrapers: stack many thin layers of silicon with microcircuitry etched into each. In principle, this should make it possible to pack more computational power into the same space. In practice, however, this currently works only with memory chips, which do not have a heat problem: they use circuits that consume power only when a memory cell is accessed, which is not that often. One example is the Hybrid Memory Cube design, a stack of as many as eight memory layers that is being pursued by an industry consortium originally launched by Samsung and memory-maker Micron Technology in Boise, Idaho.

Microprocessors are more challenging: stacking layer after layer of hot things simply makes them hotter. But one way to get around that problem is to do away with separate memory and microprocessing chips, as well as the prodigious amount of heat — at least 50% of the total — that is now generated in shuttling data back and forth between the two. Instead, integrate them in the same nanoscale high-rise.

This is tricky, not least because current-generation microprocessors and memory chips are so different that they cannot be made on the same fab line; stacking them requires a complete redesign of the chip’s structure. But several research groups are hoping to pull it off. Electrical engineer Subhasish Mitra and his colleagues at Stanford University in California have developed a hybrid architecture that stacks memory units together with transistors made from carbon nanotubes, which also carry current from layer to layer3. The group thinks that its architecture could reduce energy use to less than one-thousandth that of standard chips.

Going mobile

The second stumbling block for Moore’s law was more of a surprise, but unfolded at roughly the same time as the first: computing went mobile.

Twenty-five years ago, computing was defined by the needs of desktop and laptop machines; supercomputers and data centres used essentially the same microprocessors, just packed together in much greater numbers. Not any more. Today, computing is increasingly defined by what high-end smartphones and tablets do — not to mention by smart watches and other wearables, as well as by the exploding number of smart devices in everything from bridges to the human body. And these mobile devices have priorities very different from those of their more sedentary cousins.

Keeping abreast of Moore’s law is fairly far down on the list — if only because mobile applications and data have largely migrated to the worldwide network of server farms known as the cloud. Those server farms now dominate the market for powerful, cutting-edge microprocessors that do follow Moore’s law. “What Google and Amazon decide to buy has a huge influence on what Intel decides to do,” says Reed.

Much more crucial for mobiles is the ability to survive for long periods on battery power while interacting with their surroundings and users. The chips in a typical smartphone must send and receive signals for voice calls, Wi-Fi, Bluetooth and the Global Positioning System, while also sensing touch, proximity, acceleration, magnetic fields — even fingerprints. On top of that, the device must host special-purpose circuits for power management, to keep all those functions from draining the battery.

The problem for chipmakers is that this specialization is undermining the self-reinforcing economic cycle that once kept Moore’s law humming. “The old market was that you would make a few different things, but sell a whole lot of them,” says Reed. “The new market is that you have to make a lot of things, but sell a few hundred thousand apiece — so it had better be really cheap to design and fab them.”

Both are ongoing challenges. Getting separately manufactured technologies to work together harmoniously in a single device is often a nightmare, says Bottoms, who heads the new road map’s committee on the subject. “Different components, different materials, electronics, photonics and so on, all in the same package — these are issues that will have to be solved by new architectures, new simulations, new switches and more.”

For many of the special-purpose circuits, design is still something of a cottage industry — which means slow and costly. At the University of California, Berkeley, electrical engineer Alberto Sangiovanni-Vincentelli and his colleagues are trying to change that: instead of starting from scratch each time, they think that people should create new devices by combining large chunks of existing circuitry that have known functionality4. “It’s like using Lego blocks,” says Sangiovanni-Vincentelli. It’s a challenge to make sure that the blocks work together, but “if you were to use older methods of design, costs would be prohibitive”.

Costs, not surprisingly, are very much on the chipmakers’ minds these days. “The end of Moore’s law is not a technical issue, it is an economic issue,” says Bottoms. Some companies, notably Intel, are still trying to shrink components before they hit the wall imposed by quantum effects, he says. But “the more we shrink, the more it costs”.

Every time the scale is halved, manufacturers need a whole new generation of ever more precise photolithography machines. Building a new fab line today requires an investment typically measured in many billions of dollars — something only a handful of companies can afford. And the fragmentation of the market triggered by mobile devices is making it harder to recoup that money. “As soon as the cost per transistor at the next node exceeds the existing cost,” says Bottoms, “the scaling stops.”

Many observers think that the industry is perilously close to that point already. “My bet is that we run out of money before we run out of physics,” says Reed.
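Bottoms's stopping rule can be written down directly: scaling pays only if the next node lowers the cost of each working transistor. The sketch below uses a deliberately simple cost model, in which wafer cost is divided by good dies times transistors per die. The helper names and every figure are hypothetical. Real fab economics also include design, mask and yield-learning costs, which this model ignores.

```python
from typing import NamedTuple

class Node(NamedTuple):
    """Hypothetical process node, described by a few cost inputs."""
    wafer_cost: float           # dollars per processed wafer
    dies_per_wafer: int         # chips cut from each wafer
    yield_fraction: float       # share of dies that work
    transistors_per_die: float

def cost_per_transistor(node: Node) -> float:
    """Wafer cost spread over every transistor on the working dies."""
    good_dies = node.dies_per_wafer * node.yield_fraction
    return node.wafer_cost / (good_dies * node.transistors_per_die)

def scaling_pays_off(current: Node, nxt: Node) -> bool:
    """Move to the next node only if each transistor gets cheaper."""
    return cost_per_transistor(nxt) < cost_per_transistor(current)

today = Node(wafer_cost=5_000, dies_per_wafer=500, yield_fraction=0.9, transistors_per_die=1e9)
pricey = Node(wafer_cost=9_000, dies_per_wafer=500, yield_fraction=0.8, transistors_per_die=2e9)
cheaper = Node(wafer_cost=8_000, dies_per_wafer=500, yield_fraction=0.8, transistors_per_die=2e9)

print(scaling_pays_off(today, pricey))   # False: twice the transistors, but each one costs more
print(scaling_pays_off(today, cheaper))  # True
```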

Certainly it is true that rising costs over the past decade have forced a massive consolidation in the chip-making industry. Most of the world’s production lines now belong to a comparative handful of multinationals such as Intel, Samsung and the Taiwan Semiconductor Manufacturing Company in Hsinchu. These manufacturing giants have tight relationships with the companies that supply them with materials and fabrication equipment; they are already coordinating, and no longer find the road-map process all that useful. “The chip manufacturer’s buy-in is definitely less than before,” says Chen.

Take the SRC, which functions as the US industry’s research agency: it was a long-time supporter of the road map, says SRC vice-president Steven Hillenius. “But about three years ago, the SRC contributions went away because the member companies didn’t see the value in it.” The SRC, along with the SIA, wants to push a more long-term, basic research agenda and secure federal funding for it — possibly through the White House’s National Strategic Computing Initiative, launched in July last year.

That agenda, laid out in a report5 last September, sketches out the research challenges ahead. Energy efficiency is an urgent priority — especially for the embedded smart sensors that comprise the ‘Internet of things’, which will need new technology to survive without batteries, using energy scavenged from ambient heat and vibration. Connectivity is equally key: billions of free-roaming devices trying to communicate with one another and the cloud will need huge amounts of bandwidth, which they can get if researchers can tap the once-unreachable terahertz band lying deep in the infrared spectrum. And security is crucial — the report calls for research into new ways to build in safeguards against cyberattack and data theft.

These priorities and others will give researchers plenty to work on in coming years. At least some industry insiders, including Shekhar Borkar, head of Intel’s advanced microprocessor research, are optimists. Yes, he says, Moore’s law is coming to an end in a literal sense, because the exponential growth in transistor count cannot continue. But from the consumer perspective, “Moore’s law simply states that user value doubles every two years”. And in that form, the law will continue as long as the industry can keep stuffing its devices with new functionality.

The ideas are out there, says Borkar. “Our job is to engineer them.”

After Moore’s law

THE D-Wave 2X is a black box, 3.3 metres to a side, that looks a bit like a shorter, squatter version of the enigmatic monoliths from the film “2001: A Space Odyssey”. Its insides, too, are intriguing. Most of the space, says Colin Williams, D-Wave’s director of business development, is given over to a liquid-helium refrigeration system designed to cool it to 0.015 kelvin, only a shade above the lowest temperature that is physically possible. Magnetic shielding protects the chip at the machine’s heart from ripples and fluctuations in the Earth’s magnetic field.

Such high-tech coddling is necessary because the D-Wave 2X is no ordinary machine; it is one of the world’s first commercially available quantum computers. In fact, it is not a full-blown computer in the conventional sense of the word, for it is limited to one particular area of mathematics: finding the lowest value of complicated functions. But that specialism can be rather useful, especially in engineering. D-Wave’s client list so far includes Google, NASA and Lockheed Martin, a big American weapons-maker.
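"Finding the lowest value of complicated functions" has a concrete form in D-Wave's case. Its annealers are generally described as minimising a quadratic function of binary variables, known as a QUBO and equivalent to an Ising model. The classical brute-force sketch below uses a small, made-up coefficient table `Q` to show what such a problem looks like and why it gets hard: the number of candidate answers doubles with every added variable. It is not D-Wave's software or method, and the helper names are hypothetical.

```python
from itertools import product

# Hypothetical QUBO: diagonal terms reward switching a variable on,
# off-diagonal terms penalise switching on neighbouring pairs together.
Q = {(0, 0): -1, (1, 1): -1, (2, 2): -1, (0, 1): 2, (1, 2): 2}

def qubo_energy(Q, x):
    """Value of the quadratic function sum(Q[i, j] * x[i] * x[j]) for a 0/1 assignment x."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())

def brute_force_minimum(Q, n):
    """Check all 2**n assignments and return (lowest energy, assignment)."""
    best = None
    for bits in product((0, 1), repeat=n):
        energy = qubo_energy(Q, bits)
        if best is None or energy < best[0]:
            best = (energy, bits)
    return best

print(brute_force_minimum(Q, 3))  # (-2, (1, 0, 1))
```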

D-Wave’s machine has caused controversy, especially among other quantum-computing researchers. For a while academics in the field even questioned whether the firm had built a true quantum machine. Those arguments were settled in 2014, in D-Wave’s favour. But it is still not clear whether the machine is indeed faster than its non-quantum rivals.

D-Wave, based in Canada, is only one of many firms in the quantum-computing business. And whereas its machine is highly specialised, academics have also been trying to build more general ones that could attack any problem. In recent years they have been joined by some of the computing industry’s biggest guns, such as Hewlett-Packard, Microsoft, IBM and Google.

Quantum computing is a fundamentally different way of manipulating information. It could offer a huge speed advantage for some mathematical problems that still stump ordinary machines—and would continue to stump them even if Moore’s law were to carry on indefinitely. It is also often misunderstood and sometimes overhyped. That is partly because the field itself is so new that its theoretical underpinnings are still a work in progress. There are some tasks at which quantum machines will be unambiguously faster than the best non-quantum sort. But for a lot of others the advantage is less clear. “In many cases we don’t know whether a given quantum algorithm will be faster than the best-known classical one,” says Scott Aaronson, a computer scientist at the Massachusetts Institute of Technology. A working quantum computer would be a boon—but no one is sure how much of one.

The basic unit of classical computing is the bit, the smallest possible chunk of information. A bit can take just two values: yes or no, on or off, 1 or 0. String enough bits together and you can represent numbers of any size and perform all sorts of mathematical manipulations upon them. But classical machines can deal with just a handful of those bit-strings at a time. And although some of them can now crunch through billions of strings every second, some problems are so complex that even the latest computers cannot keep pace. Finding the prime factors of a big number is one example: the difficulty of the problem increases exponentially as the number in question gets bigger. Each tick of Moore’s law, in other words, enables the factoring of only slightly larger numbers. And finding prime factors forms the mathematical backbone of much of the cryptography that protects data as they scoot around the internet, precisely because it is hard.
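To see how quickly factoring gets harder, here is a minimal sketch of the most naive method, trial division. It counts how many divisions are needed for products of two primes of increasing size. The function name `trial_division` and the sample numbers are illustrative choices, not taken from the article. The best-known classical algorithms are far cleverer than trial division, but they too slow down steeply as numbers grow.

```python
def trial_division(n: int) -> tuple[int, int, int]:
    """Return (smallest factor, cofactor, number of divisions tried) for n > 1."""
    divisions = 0
    d = 2
    while d * d <= n:
        divisions += 1
        if n % d == 0:
            return d, n // d, divisions
        d += 1
    return n, 1, divisions  # no divisor found: n is prime


# Products of two primes, each pair roughly ten times larger than the last.
for n in (15, 143, 10403, 1022117):
    p, q, steps = trial_division(n)
    print(f"{n} = {p} x {q} after {steps} divisions")
```

Each tenfold increase in the size of the prime factors adds about two digits to n and multiplies the work by about ten. The work therefore grows exponentially in the number of digits, which is why a faster chip buys only slightly larger factorisations.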

Quantum bits, or qubits, behave differently, thanks to two counterintuitive quantum phenomena. The first is “superposition”, a state of inherent uncertainty that allows particles to exist in a mixture of states at the same time. For instance, a quantum particle, rather than having a specific location, merely has a certain chance of appearing in any one place.

Wait for it

A pipeline of new technologies to prolong Moore’s magic

THE world’s IT firms spend huge amounts on research and development. In 2015 they occupied three of the top five places in the list of biggest R&D spenders compiled by PricewaterhouseCoopers, a consultancy. Samsung, Intel and Microsoft, the three largest, alone shelled out $37 billion between them. Many of the companies are working on projects to replace the magic of Moore’s law. Here are a few promising ideas.

Optical communication: the use of light instead of electricity to communicate between computers, and even within chips. This should cut energy use and boost performance. (Hewlett-Packard, Massachusetts Institute of Technology)

Better memory technologies: building new kinds of fast, dense, cheap memory to ease one bottleneck in computer performance. (Intel, Micron)

Quantum-well transistors: the use of quantum phenomena to alter the behaviour of electrical-charge carriers in a transistor to boost its performance, enabling extra iterations of Moore’s law, increased speed and lower power consumption. (Intel)

Developing new chips and new software to automate the writing of code for machines built from clusters of specialised chips. This has proved especially difficult. (Soft Machines)

Approximate computing: making computers’ internal representation of numbers less precise to reduce the number of bits per calculation and thus save energy; and allowing computers to make random small mistakes in calculations that cancel each other out over time, which will also save energy. (University of Washington, Microsoft)

Neuromorphic computing: developing devices loosely modelled on the tangled, densely linked bundles of neurons that process information in animal brains. This may cut energy use and prove useful for pattern recognition and other AI-related tasks. (IBM, Qualcomm)

Carbon nanotube transistors: these rolled-up sheets of graphene promise low power consumption and high speed, as graphene does. Unlike graphene, they can also be switched off easily. But they have proved difficult to mass-produce. (IBM, Stanford University)
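The approximate-computing entry mentions small random errors that cancel out over time. One way this can work is stochastic rounding, sketched minimally below. This is an illustration chosen for this note, not necessarily the technique the University of Washington or Microsoft researchers use, and the helper names are hypothetical. Rounding 0.3 to the nearest integer always gives 0, so the error piles up. Rounding up with probability 0.3 instead produces errors that average out.

```python
import math
import random


def round_nearest(x: float) -> int:
    # Conventional rounding: always the closest integer, so for a given x the error never changes sign.
    return math.floor(x + 0.5)


def round_stochastic(x: float, rng: random.Random) -> int:
    # Round up with probability equal to the fractional part, so the expected result equals x.
    low = math.floor(x)
    return low + (1 if rng.random() < x - low else 0)


rng = random.Random(42)
values = [0.3] * 10_000             # true sum: 3000
nearest_sum = sum(round_nearest(v) for v in values)
stochastic_sum = sum(round_stochastic(v, rng) for v in values)
print(nearest_sum, stochastic_sum)  # nearest_sum is 0; stochastic_sum has expected value 3000
```

Each individual stochastic result is wrong (it is 0 or 1, never 0.3), but over many operations the errors largely cancel. This kind of trade of per-operation precision for aggregate accuracy is what can allow fewer bits per number.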

In computing terms, this means that a qubit, rather than being a certain 1 or a certain 0, exists as a mixture of both. The second quantum phenomenon, “entanglement”, binds together the destiny of a quantity of different particles, so that what happens to one of them will immediately affect the others. That allows a quantum computer to manipulate all of its qubits at the same time.

The upshot is a machine that can represent—and process—vast amounts of data at once. A 300-qubit machine, for instance, could represent 2^300 different strings of 1s and 0s at the same time, a number larger than the number of atoms in the visible universe. And because the qubits are entangled, it is possible to manipulate all those numbers simultaneously.
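A classical simulation makes the scale concrete. The toy sketch below stores one amplitude per bit string. It uses real-valued amplitudes for simplicity (real quantum states use complex numbers), and the helper `uniform_superposition` is hypothetical. Three qubits need 8 numbers, and each added qubit doubles the count.

```python
import math


def uniform_superposition(n_qubits: int) -> list[float]:
    """Amplitudes of an equal superposition over all 2**n_qubits bit strings."""
    size = 2 ** n_qubits
    amplitude = 1 / math.sqrt(size)
    return [amplitude] * size


state = uniform_superposition(3)
print(len(state))                           # 8 bit strings, 000 through 111
print(round(sum(a * a for a in state), 9))  # squared amplitudes (probabilities) sum to 1.0

# Storing a 300-qubit state this way is hopeless: it needs 2**300 amplitudes,
# a number with 91 decimal digits (about 2 x 10**90).
print(len(str(2 ** 300)))                   # 91
```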

Yet building qubits is hard. Superpositions are delicate things: the slightest puff of heat, or a passing electromagnetic wave, can cause them to collapse (or “decohere”), ruining whatever calculation was being run. That is why D-Wave’s machine—and all other quantum computers—have to be so carefully isolated from outside influences. Still, progress has been quick: in 2012 the record for maintaining a quantum superposition without the use of silicon stood at two seconds; by last year it had risen to six hours.

Another problem is what to build the qubits out of. Academics at the universities of Oxford and Maryland, among others, favour tickling tightly confined ions with laser beams. Hewlett-Packard, building on its expertise in optics, thinks that photons—the fundamental particles of light—hold the key. Microsoft is pursuing a technology that is exotic even by the standards of quantum computing, involving quasi-particles called anyons. Like those “holes” in a semiconductor, anyons are not real particles, but a mathematically useful way of describing phenomena that behave as if they were. Microsoft is currently far behind any of its competitors, but hopes eventually to come up with more elegantly designed and much less error-prone machines than the rest.

Probably the leading approach, used by Google, D-Wave and IBM, is to represent qubits as currents flowing through superconducting wires (which offer no electrical resistance at all). The presence or absence of a current—or alternatively, whether it is circulating clockwise or anti-clockwise—stands for a 1 or a 0. What makes this attractive is that the required circuits are relatively easy to etch into silicon, using manufacturing techniques with which the industry is already familiar. And superconducting circuits are becoming more robust, too.

Last year a team led by John Martinis, a quantum physicist working at Google, published a paper describing a system of nine superconducting qubits in which four could be examined without collapsing the other five, allowing the researchers to check for, and correct, mistakes. “We’re finally getting to the stage now where we can start to build an entire system,” says Dr Martinis.


Using a quantum computer is hard, too. In order to get the computer to answer the question put to it, its operator must measure the state of its qubits. That causes them to collapse out of their superposed state so that the result of the calculation can be read. And if the measurement is done the wrong way, the computer will spit out just one of its zillions of possible states, and almost certainly the wrong one. “You will have built the world’s most expensive random-number generator,” says Dr Aaronson.
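Here is a toy model of the readout problem. Measurement returns outcome i with probability equal to the square of its amplitude (the Born rule). The helper `measure` and the example states are hypothetical and use real amplitudes for simplicity. Measuring an untouched equal superposition yields only random numbers. A single measurement becomes informative only after an algorithm has concentrated the amplitude on the right answer.

```python
import random


def measure(amplitudes: list[float], rng: random.Random) -> int:
    """Collapse the state: return index i with probability amplitudes[i] ** 2."""
    probabilities = [a * a for a in amplitudes]
    return rng.choices(range(len(amplitudes)), weights=probabilities, k=1)[0]


rng = random.Random(0)

# Equal superposition over 8 outcomes: every reading is a uniform random draw.
uniform = [8 ** -0.5] * 8
print([measure(uniform, rng) for _ in range(10)])

# A state in which an algorithm has piled most of the amplitude onto outcome 5.
focused = [0.1] * 8
focused[5] = (1 - 7 * 0.01) ** 0.5
print([measure(focused, rng) for _ in range(10)])  # 5 with probability 0.93 on each reading
```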

For a quantum algorithm to work, the machine must be manipulated in such a way that the probability of obtaining the right answer is continually reinforced while the chances of getting a wrong answer are suppressed. One of the first useful algorithms for this purpose was published in 1994 by Peter Shor, a mathematician; it is designed to solve the prime-factorising problem explained above. Dr Aaronson points out that alongside error correction of the sort that Dr Martinis has pioneered, Dr Shor’s algorithm was one of the crucial advances which persuaded researchers that quantum computers were more than just a theoretical curiosity. Since then more such algorithms have been discovered. Some are known to be faster than their best-known classical rivals; others have yet to prove their speed advantage.
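Shor’s algorithm turns factoring into a different problem: finding the order r of a number a modulo N, the smallest r > 0 with a^r ≡ 1 (mod N). Only that step needs a quantum computer; the rest is ordinary arithmetic. The sketch below keeps the classical scaffolding and, as a stated simplification, finds the order by brute force. That brute-force step is exactly the part that becomes impractical for large N. The function names are illustrative.

```python
from math import gcd
from typing import Optional


def find_order(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    Brute force: this is the step Shor's algorithm hands to the quantum computer.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def shor_classical(n: int, a: int) -> Optional[tuple[int, int]]:
    """Try to split n using a guess a (1 < a < n); return None if this a fails."""
    shared = gcd(a, n)
    if shared != 1:
        return shared, n // shared  # lucky guess: a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None                 # odd order: pick another a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None                 # a**(r/2) = -1 mod n yields only trivial factors
    p = gcd(y - 1, n)
    return p, n // p


print(shor_classical(15, 7))   # (3, 5): the order of 7 mod 15 is 4
print(shor_classical(21, 2))   # (7, 3): the order of 2 mod 21 is 6
```

When a guess fails, the procedure simply tries another a. A quantum period-finding routine would replace `find_order` without changing the surrounding logic.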

A cryptographer’s dream

That leaves open the question of what, exactly, a quantum computer would be good for. Matthias Troyer, of the Swiss Federal Institute of Technology in Zurich, has spent the past four years conducting a rigorous search for a “killer app” for quantum computers. One commonly cited, and exciting, application is in codebreaking. Dr Shor’s algorithm would allow a quantum computer to make short work of most modern cryptographic codes. Documents leaked in early 2014 by Edward Snowden, a former American spy, proved what cryptographers had long suspected: that America’s National Security Agency (NSA) was working on quantum computers for exactly that reason. Last August the NSA recommended that the American government begin switching to new codes that are potentially less susceptible to quantum attack. The hope is that this will pre-empt any damage before a working quantum computer is built.

Another potential killer app is artificial intelligence (AI). Firms such as Google, Facebook and Baidu, China’s biggest search engine, are already putting significant sums into computers that can teach themselves to understand human voices, identify objects in images, interpret medical scans and so on. Such AI programs must be trained before they can be deployed. For a face-recognition algorithm, for instance, that means showing it thousands of images. The computer has to learn which of these are faces and which are not, and perhaps which picture shows a specific face and which not, and come up with a rule that efficiently transforms the input of an image into a correct identification.

Ordinary computers can already perform all these tasks, but D-Wave’s machine is meant to be much faster. In 2013 Google and NASA put one of them into their newly established Quantum AI Lab to see whether the machine could provide a speed boost. The practical value of this would be immense, but Dr Troyer says the answer is not yet clear.

In his view, the best use for quantum computers could be in simulating quantum mechanics itself, specifically the complicated dance of electrons that is chemistry. With conventional computers, that is fiendishly difficult. The 2013 Nobel prize for chemistry was awarded for the development of simplified models that can be run on classical computers. But, says Dr Troyer, “for complex molecules, the existing [models] are not good enough.” His team reckoned that a mixed approach, combining classical machines with the quantum sort, would do better. Their first efforts yielded algorithms with running times of hundreds of years. Over the past three years, though, the researchers have refined their algorithms to the point where a simulation could be run in hundreds of seconds instead.

It may not be as exciting as AI or code-breaking, but being able to simulate quantum processes accurately could revolutionise all sorts of industrial chemistry. The potential applications Dr Troyer lists include better catalysts, improved engine design, a better understanding of biological molecules and improving things like the Haber process, which produces the bulk of the world’s fertilisers. All of those are worthwhile goals that no amount of conventional computing power seems likely to achieve.

What comes next: Horses for courses

The end of Moore’s law will make the computer industry a much more complicated place

WHEN Moore’s law was in its pomp, life was simple. Computers got better in predictable ways and at a predictable rate. As the metronome begins to falter, the computer industry will become a more complicated place. Things like clever design and cunning programming are useful, says Bob Colwell, the Pentium chip designer, “but a collection of one-off ideas can’t make up for the lack of an underlying exponential.”

Progress will become less predictable, narrower and less rapid than the industry has been used to. “As Moore’s law slows down, we are being forced to make tough choices between the three key metrics of power, performance and cost,” says Greg Yeric, the chip designer at ARM. “Not all end uses will be best served by one particular answer.”

And as computers become ever more integrated into everyday life, the definition of progress will change. “Remember: computer firms are not, fundamentally, in it to make ever smaller transistors,” says Marc Snir, of Argonne National Laboratory. “They’re in it to produce useful products, and to make money.”

Moore’s law has moved computers from entire basements to desks to laps and hence to pockets. The industry is hoping that they will now carry on to everything from clothes to smart homes to self-driving cars. Many of those applications demand things other than raw performance. “I think we will see a lot of creativity unleashed over the next decade,” says Linley Gwennap, the Silicon Valley analyst. “We’ll see performance improved in different ways, and existing tech used in new ways.”

Mr Gwennap points to the smartphone as an example of the kind of innovation that might serve as a model for the computing industry. In 2011, only four years after the iPhone first launched, smartphone sales outstripped those of conventional PCs. Smartphones would never have been possible without Moore’s law. But although the small, powerful, frugal chips at their hearts are necessary, they are not sufficient. The appeal of smartphones lies not just in their performance but in their light, thin and rugged design and their modest power consumption. To achieve this, Apple has been heavily involved in the design of the iPhone’s chips.

And they do more than crunch numbers. Besides their microprocessors, smartphones contain tiny versions of other components such as accelerometers, GPS receivers, radios and cameras. That combination of computing power, portability and sensor capacity allows smartphones to interact with the world and with their users in ways that no desktop computer ever could.

Virtual reality (VR) is another example. This year the computer industry will make another attempt at getting this off the ground, after a previous effort in the 1990s. Firms such as Oculus, an American startup bought by Facebook; Sony, which manufactures the PlayStation console; and HTC, a Taiwanese electronics firm, all plan to launch virtual-reality headsets that they hope will revolutionise everything from films and video games to architecture and engineering.

A certain amount of computing power is necessary to produce convincing graphics for VR users, but users will settle for far less than photo-realism. The most important thing, say the manufacturers, is to build fast, accurate sensors that can keep track of where a user’s head is pointing, so that the picture shown by the goggles can be updated correctly. If the sensors are inaccurate, the user will feel “VR sickness”, an unpleasant sensation closely related to motion sickness. But good sensors do not require superfast chips.

The biggest market of all is expected to be the “internet of things”—in which cheap chips and sensors will be attached to everything, from fridges that order food or washing machines that ask clothes for laundering instructions to paving slabs in cities to monitor traffic or pollution. Gartner, a computing consultancy, reckons that by 2020 the number of connected devices in the world could run to 21 billion.

Never mind the quality, feel the bulk

The processors needed to make the internet of things happen will need to be as cheap as possible, says Dr Yeric. They will have to be highly energy-efficient, and ideally able to dispense with batteries, harvesting energy from their surroundings, perhaps in the form of vibrations or ambient electromagnetic waves. They will need to be able to communicate, both with each other and with the internet at large, using tiny amounts of power and in an extremely crowded radio spectrum. What they will not need is the latest high-tech specification. “I suspect most of the chips that power the internet of things will be built on much older, cheaper production lines,” says Dr Yeric.

Churning out untold numbers of low-cost chips to turn dumb objects into smart ones will be a big, if unglamorous, business. At the same time, though, the vast amount of data thrown off by the internet of things will boost demand for the sort of cutting-edge chips that firms such as Intel specialise in. According to Dr Yeric, “if we really do get sensors everywhere, you could see a single engineering company—say Rolls-Royce [a British manufacturer of turbines and jet engines]—having to deal with more data than the whole of YouTube does today.”

Increasingly, though, those chips will sit not in desktops but in the data centres that make up the rapidly growing computing “cloud”. The firms involved keep their financial cards very close to their chests, but making those high-spec processors is Intel’s most profitable business. Goldman Sachs, a big investment bank, reckons that cloud computing grew by 30% last year and will keep on expanding at that rate at least until 2018.

The scramble for that market could upend the industry’s familiar structure. Big companies that crunch a lot of numbers, such as Facebook and Amazon, already design their own data centres, but they buy most of their hardware off the shelf from firms such as Intel and Cisco, which makes routers and networking equipment. Microsoft, a software giant, has started designing chips of its own. Given the rapid growth in the size of the market for cloud computing, other software firms may soon follow.

The twilight of Moore’s law, then, will bring change, disorder and plenty of creative destruction. An industry that used to rely on steady improvements in a handful of devices will splinter. Software firms may begin to dabble in hardware; hardware makers will have to tailor their offerings more closely to their customers’ increasingly diverse needs. But, says Dr Colwell, remember that consumers do not care about Moore’s law per se: “Most of the people who buy computers don’t even know what a transistor does.” They simply want the products they buy to keep getting ever better and more useful. In the past, that meant mostly going for exponential growth in speed. That road is beginning to run out. But there will still be plenty of other ways to make better computers.

Moore’s Law Keeps Going, Defying Expectations

Personal computers, cellphones, self-driving cars—Gordon Moore predicted the invention of all these technologies half a century ago in a 1965 article for Electronics magazine. The enabling force behind those inventions would be computing power, and Moore laid out how he thought computing power would evolve over the coming decade. Last week the tech world celebrated his prediction because it has held true, with uncanny accuracy, for the past 50 years.

It is now called Moore’s law, although Moore (who co-founded the chip maker Intel) doesn’t much like the name. “For the first 20 years I couldn’t utter the term Moore’s law. It was embarrassing,” the 86-year-old visionary said in an interview with New York Times columnist Thomas Friedman at the gala event, held at the Exploratorium science museum. “Finally, I got accustomed to it where now I could say it with a straight face.” He and Friedman chatted in front of a rapt audience, with Moore cracking jokes the whole time and doling out advice, like how once you’ve made one successful prediction, you should avoid making another. In the background Intel’s latest gadgets whirred quietly: collision-avoidance drones, dancing spider robots, a braille printer—technologies all made possible via advances in processing power anticipated by Moore’s law.

Of course, Moore’s law is not really a law like those describing gravity or the conservation of energy. It is a prediction that the number of transistors (a computer’s electrical switches used to represent 0s and 1s) that can fit on a silicon chip will double every two years as technology advances. This leads to incredibly fast growth in computing power without a concomitant expense and has led to laptops and pocket-size gadgets with enormous processing ability at fairly low prices. Advances under Moore’s law have also enabled smartphone verbal search technologies such as Siri—it takes enormous computing power to analyze spoken words, turn them into digital representations of sound and then interpret them to give a spoken answer in a matter of seconds.

Another way to think about Moore’s law is to apply it to a car. Intel CEO Brian Krzanich explained that if a 1971 Volkswagen Beetle had advanced at the pace of Moore’s law over the past 44 years, today “you would be able to go with that car 300,000 miles per hour. You would get two million miles per gallon of gas, and all that for the mere cost of four cents.”

But Moore never thought his prediction would last 50 years. “The original prediction was to look at 10 years, which I thought was a stretch,” he told Friedman last week. “This was going from about 60 elements on an integrated circuit to 60,000—a 1,000-fold extrapolation over 10 years. I thought that was pretty wild. The fact that something similar is going on for 50 years is truly amazing.”
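The arithmetic behind these figures is simple compounding. A quantity that doubles every T years grows by a factor of 2^(t/T) after t years. The sketch below checks the numbers Moore quotes, using illustrative helper names. A 1,000-fold rise over ten years implies doubling roughly every year. Fifty years of doubling every two years compounds to a factor of about 33 million.

```python
import math


def growth_factor(years: float, doubling_period: float) -> float:
    """How many times larger a quantity gets if it doubles every doubling_period years."""
    return 2 ** (years / doubling_period)


def doubling_period(factor: float, years: float) -> float:
    """Doubling period implied by growing factor-fold over the given number of years."""
    return years / math.log2(factor)


# Moore's 1965 extrapolation: about 60 components to about 60,000 in ten years.
print(doubling_period(60_000 / 60, 10))  # about 1.0 year per doubling
print(60 * growth_factor(10, 1))         # 61440.0 components

# Fifty years at the later two-year doubling period.
print(growth_factor(50, 2))              # 33554432.0, i.e. 2**25
```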

Just why Moore’s law has endured so long is hard to say. His doubling prediction turned into an industry objective for competing companies. “It might be a self-fulfilling law,” Denning explains. But it is not clear why it is a constant doubling every couple of years, as opposed to a different rate or fluctuating spikes in progress. “Science has mysteries, and in some ways this is one of those mysteries,” Denning adds. Certainly, if the rate could have gone faster, someone would have done it, notes computer scientist Calvin Lin of the University of Texas at Austin.

Many technologists have forecast the demise of Moore’s doubling over the years, and Moore himself states that this exponential growth can’t last forever. Still, his law persists today, and hence the computational growth it predicts will continue to profoundly change our world. As he put it: “We’ve just seen the beginning of what computers are going to do for us.”

Scientists make world’s thinnest transistor – at three atoms thick


In what could be the development that keeps Moore’s law plugging along, scientists in the US have produced a transistor that’s just a few atoms thick, opening up the possibility of some ridiculously tiny electronics.

First proposed in 1965, Moore’s law predicts that the number of transistors on a chip, and with it the processing power of computers, will double roughly every two years. While it’s still pretty much accepted as a rule, people have started to doubt its longevity. Surely there’s a limit to how many transistors we can pack into an integrated circuit? Well, if there is, it looks like we haven’t hit it yet, because a team from Cornell University in the US have achieved a pretty significant record with their new tiny transistor.

The transistor is made using two-dimensional semiconductors known as transition-metal dichalcogenides (TMDs), compounds of a transition metal and a chalcogen such as sulfur. When reduced to a single layer, these TMDs are just three atoms thick: a sheet of metal atoms sandwiched between two sheets of chalcogen atoms. One of them, molybdenum disulfide, is a silvery-black semiconductor that’s been touted for its superior electrical qualities over the past few years.

The team worked out how to grow sheets of the material just a few atoms thick. Amazingly, even at this thickness, the molybdenum disulfide film retained its electrical properties, which makes it a promising candidate for use in future electronics. “The electrical performance of our materials was comparable to that of reported results from single crystals of molybdenum disulfide, but instead of a tiny crystal, here we have a 4-inch (10-cm) wafer,” one of the team, Jiwoong Park, said in a press release.

To create this atoms-thick molybdenum disulfide film, the team used a technique called metal-organic chemical vapour deposition (MOCVD), in which vaporised precursor compounds react on a heated substrate and build up the material one layer at a time.

“The process starts with two commercially available precursor compounds – diethylsulfide and a metal hexacarbonyl compound – mixed on a silicon wafer and baked at 550 degrees Celsius for 26 hours in the presence of hydrogen gas,” explains Russell Brandom at The Verge. “The result was an array of 200 ultra-thin transistors with good electron mobility and only a few defects. Just two of them failed to conduct, leaving researchers with a 99 percent success rate.”

Publishing in Nature, the team says the next step is to figure out how to produce the film in a more consistent way, so the conductivity can be more accurately measured. But what they’ve achieved so far is a real step in the right direction.

“Lots of people are trying to grow single layers on this large scale, myself among them,” materials scientist Georg Duesberg from Trinity College Dublin in Ireland, who was not involved in the research, told Elizabeth Gibney at Nature. “But it looks like these guys have really done it.”

Computing after Moore’s Law

Fifty years ago this month Gordon Moore published a historic paper with an amusingly casual title: “Cramming More Components onto Integrated Circuits.” The document was Moore’s first articulation of a principle that, after a little revision, became elevated to a law: Every two years the number of transistors on a computer chip will double.

As anyone with even a casual interest in computing knows, Moore’s law is responsible for the information age. “Integrated circuits make computers work,” writes John Pavlus in “The Search for a New Machine” in the May Scientific American, “but Moore’s law makes computers evolve.” People have been predicting the end of Moore’s law for decades, and engineers have always come up with a way to keep the pace of progress alive. But there is reason to believe those engineers will soon run up against insurmountable obstacles. “Since 2000 chip engineers faced with these obstacles have been developing clever workarounds,” Pavlus writes, “but these stopgaps will not change the fact that silicon scaling has less than a decade left to live.”

Faced with this deadline, chip manufacturers are investing billions to study and develop new computing technologies. In his article Pavlus takes us on a tour of this research and development frenzy. Although it’s impossible to know which technology will surmount silicon—and there’s good reason to believe it will be a combination of technologies rather than any one breakthrough—we can take a look at the contenders. Here’s a quick survey.

Graphene

One of the more radical moves a manufacturer of silicon computer chips could make would be to ditch silicon altogether. It’s not likely to happen soon but last year IBM did announce that it was spending $3 billion to look for alternatives. The most obvious candidate is—what else?—graphene, single-atom sheets of carbon. “Like silicon,” Pavlus writes, “graphene has electronically useful properties that remain stable under a wide range of temperatures. Even better, electrons zoom through it at relativistic speeds. And most crucially, it scales—at least in the laboratory. Graphene transistors have been built that can operate hundreds or even thousands of times faster than the top-performing silicon devices, at reasonable power density, even below the five-nanometer threshold in which silicon goes quantum.” A significant problem, however, is that graphene doesn’t have a band gap—the quantum property that makes it possible to switch a transistor on and off.

Carbon Nanotubes

Roll a single-atom sheet of carbon into a cylinder and the situation improves: carbon nanotubes develop a band gap and, along with it, some semiconducting properties. But Pavlus found that even the researchers charged with developing carbon nanotube–based computing had their doubts. “Carbon nanotubes are delicate structures,” he writes. “If a nanotube’s diameter or chirality—the angle at which its carbon atoms are ‘rolled’—varies by even a tiny amount, its band gap may vanish, rendering it useless as a digital circuit element. Engineers must also be able to place nanotubes by the billions into neat rows just a few nanometers apart, using the same technology that silicon fabs rely on now.”

Memristors

Hewlett-Packard is developing chips based on an entirely new type of electronic component: the memristor. Predicted in 1971 but only developed in 2008, memristors—the term is a portmanteau combining “memory” and “resistor”—possess the strange ability to “remember” how much current previously flowed through them. As Pavlus explains, memristors make it possible to combine storage and random-access memory. “The common metaphor of the CPU as a computer’s ‘brain’ would become more accurate with memristors instead of transistors because the former actually work more like neurons—they transmit and encode information as well as store it,” he writes.
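Here is a minimal sketch of why a memristor “remembers”. In the simple linear ion-drift model that HP researchers described in 2008, the device is a thin film of thickness D. The doped fraction of the film, x = w/D, moves in proportion to the current passing through it. The resistance interpolates between a low value R_on (fully doped) and a high value R_off (undoped). The class name and parameter values below are illustrative assumptions, and real devices behave less ideally than this model.

```python
from dataclasses import dataclass


@dataclass
class LinearDriftMemristor:
    """Toy linear ion-drift memristor; parameter values are illustrative."""
    r_on: float = 100.0         # ohms when the film is fully doped
    r_off: float = 16_000.0     # ohms when the film is undoped
    thickness: float = 10e-9    # film thickness D, in metres
    mobility: float = 1e-14     # dopant mobility mu_v, in m^2 / (V s)
    x: float = 0.1              # doped fraction w / D, kept between 0 and 1

    def resistance(self) -> float:
        return self.r_on * self.x + self.r_off * (1.0 - self.x)

    def apply_current(self, amps: float, seconds: float, steps: int = 1000) -> None:
        # dx/dt = mobility * r_on / thickness**2 * current, clipped at the film edges.
        rate = self.mobility * self.r_on / self.thickness ** 2
        dt = seconds / steps
        for _ in range(steps):
            self.x = min(1.0, max(0.0, self.x + rate * amps * dt))


m = LinearDriftMemristor()
print(m.resistance())         # about 14410 ohms at the start
m.apply_current(1e-5, 1.0)    # push 10 microcoulombs of charge forward
print(m.resistance())         # lower: the film is now about 20% doped
m.apply_current(0.0, 5.0)     # no current: the state simply persists
print(m.resistance())
m.apply_current(-1e-5, 1.0)   # the same charge in reverse undoes the change
print(m.resistance())         # back to about 14410 ohms
```

In this model the resistance records the net charge that has passed through the device. That stored state is what lets one component act as both memory and circuit element.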

Cognitive Computers

To build chips “at least as ‘smart’ [as a] housefly,” researchers in IBM’s cognitive computing group are exploring processors that ditch the calculator-like von Neumann architecture. Instead, as Pavlus explains, they “mimic cortical columns in the mammalian brain, which process, transmit and store information in the same structure, with no bus bottlenecking the connection.” The result is IBM’s TrueNorth chip, in which five billion transistors model a million neurons linked by 256 million synaptic connections. “What that arrangement buys,” Pavlus writes, “is real-time pattern-matching performance on the energy budget of a laser pointer.”
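Chips like TrueNorth compute with spiking neurons rather than with a central processor shuttling data over a bus. A generic leaky integrate-and-fire neuron, sketched below, conveys the idea. It is a textbook simplification, not IBM’s actual neuron circuit, and the leak and threshold values are arbitrary. The neuron’s state (its membrane potential) is stored where it is computed, and the neuron communicates only by emitting spikes.

```python
def lif_spikes(inputs: list[float], leak: float = 0.9, threshold: float = 1.0) -> list[int]:
    """Return the time steps at which a leaky integrate-and-fire neuron spikes."""
    potential = 0.0
    spikes = []
    for t, current in enumerate(inputs):
        potential = potential * leak + current  # decay a little, then add the new input
        if potential >= threshold:
            spikes.append(t)                    # fire...
            potential = 0.0                     # ...and reset
    return spikes


print(lif_spikes([0.5] * 6))    # [2, 5]: steady input builds up to a spike every third step
print(lif_spikes([0.05] * 50))  # []: weak input leaks away faster than it accumulates
```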

Ohm Run: One-Atom-Tall Wires Could Extend Life of Moore’s Law

WALKING A THIN LINE: Nanowires made from phosphorus atoms in silicon behave much like larger wires. Image: Courtesy of Bent Weber

There may be a bit more room at the bottom, after all.

In 1959 physicist Richard Feynman delivered a famous address at a meeting of the American Physical Society, a talk entitled “There’s Plenty of Room at the Bottom.” It was an invitation to push the boundaries of the miniature, a nanotech call to arms that many physicists heeded to great effect. But more than 50 years after his challenge, researchers have begun to run up against a few hurdles that could slow the progression toward ever-tinier devices. Someday soon those hurdles could threaten Moore’s Law, which describes the semiconductor industry’s steady, decades-long progression toward smaller, faster, cheaper circuits.

One issue is that as wires shrink to just nanometers in diameter, their resistivity tends to grow, curbing their usefulness as current carriers. Now a team of researchers has shown that it is possible to fabricate low-resistivity nanowires at the smallest scales imaginable by stringing together individual atoms in silicon.

The group, from the University of New South Wales (U.N.S.W.) and the University of Melbourne in Australia, and from Purdue University in Indiana, constructed their wires from chains of phosphorus atoms. The wires, described in the January 6 issue of Science, were as small as four atoms (about 1.5 nanometers) wide and a single atom tall. Each wire was prepared by lithographically writing lines onto a silicon sample with microscopy techniques and then depositing phosphorus along that line. By packing the phosphorus atoms close together and encasing the nanowires in silicon, the researchers were able to scale down without sacrificing conductivity, at least at low temperatures.

“What people typically find is that below about 10 nanometers the resistivity increases exponentially in these [silicon] wires,” says Michelle Simmons, a U.N.S.W. physicist and a study co-author. But that appears not to be a problem with the new wires. “As we change the width of the wire, the resistivity remains the same,” she says.

Phosphorus is often introduced into silicon because each phosphorus atom donates an electron to the silicon crystal, which promotes electrical conduction; the donor atoms can even serve as bits in quantum computation schemes. But those conduction electrons can easily be pulled away from duty, especially in tiny wires where the wire’s exposed surface is large compared with its volume. By encasing the nanowires entirely in silicon, Simmons and her colleagues made the conduction electrons more immune to outside influence. “That moves the wires away from the surfaces and away from other interfaces,” Simmons says. “That allows the electron to stay conducting and not get caught up in other interfaces.”

Demonstrating electric transport in a wire so small “is quite an accomplishment,” says Volker Schmidt, a researcher at the Max Planck Institute of Microstructure Physics in Halle, Germany. “And being able to fabricate metallic wires of such dimensions, by this theoretically microelectronics-compatible approach, could be a potentially interesting route for silicon-based electronics.”

The wires, the researchers say, have the carrying capacity of copper, indicating that the technique might help microchips continue their steady shrinkage over time. The new finding might even extend the life of Moore’s Law, wrote David Ferry, an electrical engineer at Arizona State University in Tempe, in a commentary accompanying the research in Science.

But don’t expect to find atom-scale nanowires in your next gadget purchase. The technology is still in its early phase, with wire formation requiring atom-scale lithography with a scanning tunneling microscope. “It’s not an industry-compatible tool at the moment,” Simmons says.

Source: Scientific American.