by Sayer Ji

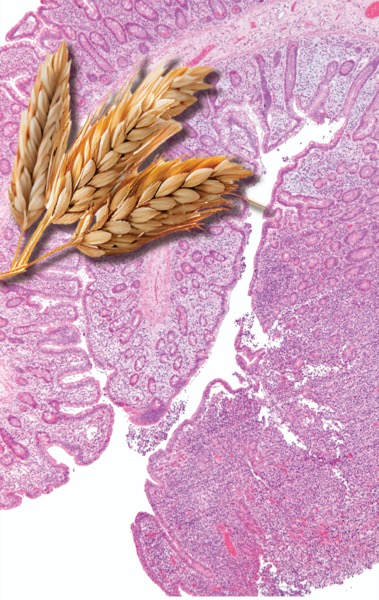

The globe-spanning presence of wheat and its exalted status among secular and sacred institutions alike differentiates this food from all others presently enjoyed by humans. Yet the unparalleled rise of wheat as the very catalyst for the emergence of ancient civilization has not occurred without a great price. While wheat was the engine of civilization’s expansion and was glorified as a “necessary food,” both in the physical sense (the staff of life) and in the spiritual sense (the body of Christ), those suffering from celiac disease are living testimony to the lesser-known dark side of wheat. A study of celiac disease may help unlock the mystery of why modern man, who dines daily at the table of wheat, is the sickest animal yet to have arisen on this strange planet of ours.

The Celiac Iceberg

Celiac disease (CD) was once considered an extremely rare affliction, limited to individuals of European descent. Today, however, a growing number of studies indicate that celiac disease is found throughout the world at a rate of up to 1 in every 100 persons, which is several orders of magnitude higher than previously estimated.

These findings have led researchers to visualize CD as an iceberg. The tip of the iceberg represents the relatively small number of the world’s population whose gross presentation of clinical symptoms often leads to a diagnosis of celiac disease. This is the classical case of CD, characterized by gastrointestinal symptoms, malabsorption and malnourishment, and confirmed with the “gold standard” of an intestinal biopsy. The submerged middle portion of the iceberg is largely invisible to classical clinical diagnosis, but not to modern serological screening methods in the form of antibody testing. This middle portion is composed of asymptomatic and latent celiac disease as well as “out of the intestine” varieties of wheat intolerance. Finally, at the base of this massive iceberg sits approximately 20-30% of the world’s population: those who have been found to carry the HLA-DQ2 or HLA-DQ8 variants at the HLA-DQ locus on chromosome 6, which confer genetic susceptibility to celiac disease.*

The “Celiac Iceberg” may not simply illustrate the problems and issues associated with diagnosis and disease prevalence, but may represent the need for a paradigm shift in how we view both CD and wheat consumption among non-CD populations.

First, let us address the traditional view of CD as a rare but clinically distinct species of genetically determined disease, a view I believe is now running aground on the emerging post-Genomic perspective, whose implications for understanding and treating disease are Titanic in proportion.

It Is Not In the Genes, But What We Expose Them To

Despite common misconceptions, monogenic diseases, or diseases that result from errors in the nucleotide sequence of a single gene, are exceedingly rare. Perhaps only 1% of all diseases fall within this category, and celiac disease is not one of them. In fact, following the completion of the Human Genome Project (HGP) in 2003, it is no longer accurate to say that our genes “cause” disease, any more than it is accurate to say that DNA alone is sufficient to account for all the proteins in our body. Despite initial expectations, the HGP revealed that there are only 20,000-25,000 genes in human DNA (the genome), rather than the 100,000+ believed necessary to encode the 100,000+ proteins found in the human body (the proteome).

The “blueprint” model of genetics (one gene → one protein → one cellular behavior), once the holy grail of biology, has now been supplanted by a model of the cell in which epigenetic factors (literally, “above the gene”) are primary in determining how DNA will be interpreted, translated and expressed. A single gene can be used by the cell to express a multitude of proteins, and it is not the DNA alone that determines how or which genes will be expressed. Rather, we must look to epigenetic factors to understand what makes a liver cell different from a skin cell or a brain cell. All of these cells share the exact same 3 billion base pairs that make up our genome, but it is the epigenetic factors, e.g. regulatory proteins and post-translational modifications, that determine which genes to turn on and which to silence, resulting in each cell’s unique phenotype. Moreover, epigenetic factors are directly and indirectly influenced by the presence or absence of key nutrients in the diet, as well as by exposure to chemicals, pathogens and other environmental influences.

In a nutshell, what we eat and what we are exposed to in our environment directly affects our DNA and its expression.

Within the scope of this new perspective, even classical monogenic diseases like cystic fibrosis (CF) can be viewed in a new, more promising light. In CF, many of the adverse changes that result from the defective expression of the Cystic Fibrosis Transmembrane Conductance Regulator (CFTR) gene may be preventable or reversible, owing to the fact that the misfolded CFTR gene product has been shown to undergo partial or full correction (in the rodent model) when exposed to phytochemicals found in turmeric, cayenne and soybeans. Moreover, nutritional deficiencies of selenium, zinc, riboflavin, vitamin E, etc. in the womb or early in life may “trigger” the faulty expression or folding patterns of the CFTR gene, which might otherwise have avoided epigenetic activation. This would explain why it is possible to live into one’s late seventies with this condition, as was the case for Katherine Shores (1925-2004). The implications of these findings are rather extraordinary: epigenetic and not genetic factors are primary in determining disease outcome. Even if we exclude the possibility of reversing certain monogenic diseases, the basic lesson from the post-Genomic era is that we can’t blame our DNA for causing disease. Rather, it may have more to do with what we choose to expose our DNA to.

Celiac Disease Revisited

What all of this means for CD is that the genetic susceptibility locus, HLA-DQ, does not by itself determine the exact clinical outcome of the disease. Instead of being ‘the cause,’ the HLA genes may be activated as a consequence of the disease process. Thus, we may need to shift our epidemiological focus from viewing this as a classical “disease” involving a passive subject controlled by aberrant genes, to viewing it as an expression of a natural, protective response to the ingestion of something that the human body was not designed to consume.

If we view celiac disease not as an unhealthy response to a healthy food, but as a healthy response to an unhealthy food, classical CD symptoms like diarrhea may make more sense. Diarrhea can be the body’s way to reduce the duration of exposure to a toxin or pathogen, and villous atrophy can be the body’s way of preventing the absorption and hence, the systemic effects of chronic exposure to wheat.

I believe we would be better served by viewing the symptoms of CD as expressions of bodily intelligence rather than deviance. We must shift the focus back to the disease trigger, which is wheat itself.

People with celiac disease may actually have an advantage over the apparently non-afflicted. Those who are “non-symptomatic,” and whose wheat intolerance goes undiagnosed or misdiagnosed because they lack the classical symptoms, may suffer in ways that are equally or more damaging but expressed more subtly, or in distant organs. Within this view celiac disease would be redefined as a protective (healthy?) response to exposure to an inappropriate substance, whereas “asymptomatic” ingestion of the grain, with its concomitant “out of the intestine” and mostly silent symptoms, would be considered the unhealthy response insofar as it does not signal in an obvious and acute manner that there is a problem with consuming wheat.

It is possible that celiac disease represents an extreme reaction to a global, species-specific intolerance to wheat that we all share in varying degrees. CD symptoms may reflect the body’s innate intelligence when faced with the consumption of a substance that is inherently toxic. Let me illustrate this point using wheat germ agglutinin (WGA) as an example.

WGA is classified as a lectin and is known to play a key role in kidney pathologies, such as IgA nephropathy. In the article “Do dietary lectins cause disease?” the allergist David L. J. Freed points out that WGA binds to “glomerular capillary walls, mesangial cells and tubules of human kidney and (in rodents) binds IgA and induces IgA mesangial deposits,” indicating that wheat consumption may lead to kidney damage in susceptible individuals. Indeed, a study from the Mario Negri Institute for Pharmacological Research in Milan, Italy, published in 2007 in the International Journal of Cancer, looked at bread consumption and the risk of kidney cancer. It found that those who consumed the most bread had a 94% higher risk of developing kidney cancer than those who consumed the least. Given the inherently toxic effect that WGA may have on kidney function, it is possible that in certain genetically predisposed individuals (e.g. HLA-DQ2/DQ8) the body, in its innate intelligence, makes an executive decision: either continue to allow damage to the kidneys (or possibly other organs) until kidney failure and rapid death result, or launch an autoimmune attack on the villi to prevent the absorption of the offending substance, which results in a prolonged though relatively malnourished life. A similar explanation is typically given for the body’s reflexive formation of mucus following exposure to certain highly allergenic or potentially toxic foods, e.g. dairy products and sugar: the mucus coats the offending substance, preventing its absorption and facilitating safe elimination via the gastrointestinal tract. From this perspective the HLA-DQ locus of disease susceptibility in the celiac is not simply activated but utilized as a defensive adaptation to continual exposure to a harmful substance.

In those who do not carry the HLA-DQ susceptibility variants, an autoimmune destruction of the villi will not occur as rapidly, and exposure to the universally toxic effects of WGA will likely go unabated until silent damage to distant organs leads to the diagnosis of a disease that is apparently unrelated to wheat consumption.

Loss of kidney function may only be the “tip of the iceberg” when it comes to the possible adverse effects that wheat proteins and wheat lectin can generate in the body. If kidney cancer is a real possibility, then other cancers may eventually be linked to wheat consumption as well. Such a correlation would fly in the face of globally sanctioned and reified assumptions about the inherent benefits of wheat consumption, and would require that we suspend the cultural, socio-economic, political and even religious beliefs that sustain them. In many ways, the reassessment of the value of wheat as a food requires a William Burroughs-like moment of shocking clarity when we perceive “in a frozen moment... what is on the end of every fork.” Let’s take a closer look at what is on the end of our forks.

Our Biologically Inappropriate Diet

In a previous article, I discussed the role that wheat plays as an industrial adhesive (e.g. in paints, papier-mâché and bookbinding glue) in order to illustrate the point that it may not be such a good thing for us to eat. The problem is implicit in the word gluten, which literally means “glue” in Latin, and in words like pastry and pasta, which share a root with paste, as in wheatpaste, the original concoction of wheat flour and water that made such good plaster in ancient times. What gives gluten its adhesive and difficult-to-digest qualities is the high level of disulfide bonds it contains. These same sulfur-to-sulfur bonds are found in hair and in vulcanized rubber, both notoriously difficult to decompose, and they are responsible for the sulfurous odor these materials give off when burned.

There will be 676 million metric tons of wheat produced this year alone, making it the primary cereal of temperate regions and the third most prolific cereal grass on the planet. This global dominance of wheat is signified by the use of a head of wheat as the official symbol of the Food and Agriculture Organization (FAO), the United Nations’ international agency for defeating hunger. Any effort to indict the credibility of this “king of grains” will prove challenging. As Rudolf Hauschka once remarked, wheat is “a kind of earth-spanning organism.” It has vast socio-economic, political and cultural significance. For example, in the Catholic Church, a wafer made of wheat is considered irreplaceable as the embodiment of Christ.

Our dependence on wheat is matched only by its dependence on us. As Europeans have spread across the planet, so has this grain. We have assumed total responsibility for all phases of the wheat life cycle: from fending off its pests; to providing its ideal growing conditions; to facilitating reproduction and expansion into new territories. We have become so inextricably interdependent that neither species is sustainable at current population levels without this symbiotic relationship.

It is this co-dependence that may explain why our culture has for so long consistently confined wheat intolerance to categorically distinct, “genetically-based” diseases like “celiac.” These categorizations may protect us from the realization that wheat exerts a vast number of deleterious effects on human health in the same way that “lactose intolerance” distracts attention from the deeper problems associated with the casein protein found in cow’s milk. Rather than see wheat for what it very well may be: a biologically inappropriate food source, we “blame the victim,” and look for genetic explanations for what’s wrong with small subgroups of our population who have the most obvious forms of intolerance to wheat consumption, e.g. celiac disease, dermatitis herpetiformis, etc. The medical justification for these classifications may be secondary to economic and cultural imperatives that require the inherent problems associated with wheat consumption be minimized or occluded.

In all probability the celiac genotype represents a surviving vestigial branch of a once-universal genotype whose carriers, through accident or intention, had only limited exposure to wheat over successive generations. The celiac genotype, no doubt, survived through numerous bottlenecks or “die-offs” brought on by a dramatic shift from hunted and foraged/gathered foods to gluten-grain consumption, and for whatever reason simply did not have adequate time to adapt or to select out the gluten-grain-incompatible genes. The celiac response may indeed reflect a prior, species-wide intolerance to a novel food source: the seed storage form of the monocotyledonous cereal grasses, which our species began consuming no more than about 500 generations ago at the advent of the Neolithic transition (10,000-12,000 years ago). Let us return to the image of the celiac iceberg for greater clarification.

Our Submerged Grain-Free Prehistory

The iceberg metaphor is an excellent way to expand our understanding of what was once considered an extraordinarily rare disease into one that has statistical relevance for us all, but it has a few limitations. For one, it reiterates the commonly held view that celiac disease is a numerically distinct disease entity or “disease island,” floating alongside other numerically distinct disease “ice cubes” in the vast sea of normal health. Though accurate in describing the sense of social and psychological isolation many of the afflicted feel, the celiac iceberg/condition may not be a distinct disease entity at all.

Although the HLA-DQ locus of disease susceptibility on chromosome 6 offers us a place to project blame, I believe we need to shift the emphasis of responsibility for the condition back to the disease “trigger” itself: namely, wheat and other prolamin-rich grains, e.g. barley, rye, spelt and oats. Without these grains the typical afflictions we call celiac would not exist. Within the scope of this view the “celiac iceberg” is not actually free-floating but an outcropping from an entire submerged subcontinent, representing our long-forgotten (in cultural time) but relatively recent (in biological time) metabolic prehistory as hunter-gatherers, when grain consumption was, in all likelihood, non-existent except in instances of near-starvation.

The pressure on the celiac to be viewed as an exceptional case or deviation may have everything to do with our preconscious belief that wheat, and grains as a whole, are “health foods,” and very little to do with a rigorous investigation of the facts.

Grains have been heralded since time immemorial as the “staff of life,” when in fact they are more accurately described as a cane, precariously propping up a body starved of the nutrient-dense, low-starch vegetables, fruits, edible seeds and meats they have so thoroughly supplanted (cf. the Paleolithic diet). Most of the diseases of affluence, e.g. type 2 diabetes, coronary heart disease and cancer, can be linked to the consumption of a grain-based diet, including secondary “hidden sources” of grain consumption in grain-fed fish, poultry, meat and milk products.

Our modern belief that grains make for good food is simply not supported by the facts. The cereal grasses belong to an entirely different group, the monocotyledons (plants whose embryo has a single seed leaf), than the plants on which our bodies sustained themselves for millions of years, the dicotyledons (two seed leaves). The preponderance of scientific evidence points to a human origin in the tropical rainforests of Africa, where dicotyledonous fruits would have been available for year-round consumption. It would not have been monocotyledonous plants, but the flesh of hunted animals, that allowed for the migration out of Africa 60,000 years ago into the northern latitudes, where vegetation would have been sparse or non-existent during the winter months. Collecting and cooking grains would have been improbable given the low nutrient and caloric content of grains and the inadequate development of the pyrotechnology and cooking utensils necessary to consume them with any efficiency. It was not until the end of the last Ice Age, 20,000 years ago, that our human ancestors would have slowly transitioned to a cereal-grass-based diet, coinciding with the emergence of civilization. Twenty thousand years is probably not enough time to fully adapt to the consumption of grains. Even animals like cows, with a head start of thousands of years, having evolved to graze on monocotyledons and equipped as ruminants with a four-chambered stomach that enables the breakdown of cellulose and anti-nutrient-rich plants, are not designed to consume grains. Cows are designed to consume the sprouted, mature form of the grasses and not their seed storage form. Grains are so acidic/toxic in reaction that exclusively grain-fed cattle are prone to developing severe acidosis and subsequent liver abscesses and infections. Feeding wheat to cattle provides an even greater challenge:

“Beef: Feeding wheat to ruminants requires some caution as it tends to be more apt than other cereal grains to cause acute indigestion in animals which are unadapted to it. The primary problem appears to be the high gluten content of wheat which in the rumen can result in a ‘pasty’ consistency to the rumen contents and reduced rumen motility.”

(Source: Ontario Ministry of Agriculture, Food and Rural Affairs)

Seeds, after all, are the “babies” of these plants, and are invested not only with the entire hope for the continuance of their species, but with a vast armory of anti-nutrients to help them accomplish this task: toxic lectins, phytates and oxalates, alpha-amylase and trypsin inhibitors, and endocrine disrupters. These not-so-appetizing phytochemicals enable plants to resist predation of their seeds, or at least prevent them from “going out without a punch.”

Wheat: An Exceptionally Unwholesome Grain

Wheat presents a special case insofar as wild hybridization and selective breeding have produced varieties with up to six sets of chromosomes (three times the two sets found in human cells), capable of generating a massive number of proteins, each with a distinct potential for antigenicity. Common bread wheat (Triticum aestivum), for instance, has 23,788 proteins cataloged thus far. In fact, the genome of common bread wheat is actually 6.5 times larger than the human genome!

With some varieties of wheat containing up to 50% more gluten, it is amazing that we continue to consider “glue-eating” a normal behavior, whereas wheat avoidance is left to the “celiac,” who is still perceived by the majority of health care practitioners as mounting a “freak” reaction to the consumption of something intrinsically wholesome.

Thankfully we don’t need to rely on our intuition, or even (not so) common sense, to draw conclusions about the inherently unhealthy nature of wheat. A wide range of investigations over the past decade has revealed problems with the alcohol-soluble protein component of wheat known as gliadin, the sugar-binding protein known as lectin (wheat germ agglutinin), the exorphin known as gliadorphin, and the excitotoxic potential of the high levels of aspartic and glutamic acid found in wheat. Add to these the anti-nutrients found in grains, such as phytates and enzyme inhibitors, and you have a substance that we may more appropriately consider the farthest thing from wholesome.

The remainder of this article will demonstrate the following adverse effects of wheat on both celiac and non-celiac populations: 1) wheat causes damage to the intestines; 2) wheat causes intestinal permeability; 3) wheat has pharmacologically active properties; 4) wheat causes damage that is “out of the intestine,” affecting distant organs; 5) wheat induces molecular mimicry; and 6) wheat contains high concentrations of excitotoxins.

1) WHEAT GLIADIN CREATES IMMUNE MEDIATED DAMAGE TO THE INTESTINES

Gliadin is the prolamin of wheat. Prolamins are plant storage proteins high in the amino acids proline and glutamine and soluble in strong alcohol solutions. Once deamidated by the enzyme tissue transglutaminase, gliadin is considered the primary epitope for T-cell activation and subsequent autoimmune destruction of intestinal villi. Yet gliadin does not need to activate an autoimmune response, such as celiac disease, in order to have a deleterious effect on intestinal tissue.

In a study published in Gut in 2007, a group of researchers asked the question: “Is gliadin really safe for non-coeliac individuals?” To test the hypothesis that an innate immune response to gliadin is common in patients both with and without celiac disease, they cultured intestinal biopsies from both groups and challenged them with crude gliadin, the synthetic gliadin 19-mer (a gliadin peptide 19 amino acids long) and deamidated 33-mer peptides. Results showed that all patients, with or without celiac disease, produced an interleukin-15-mediated response when challenged with the various forms of gliadin. The researchers concluded:

“The data obtained in this pilot study supports the hypothesis that gluten elicits its harmful effect, throughout an IL15 innate immune response, on all individuals [my italics].”

The primary difference between the two groups is that the celiac disease patients experienced both an innate and an adaptive immune response to the gliadin, whereas the non-celiacs experienced only the innate response. The researchers hypothesized that the difference between the two groups may be attributable to greater genetic susceptibility at the HLA-DQ locus for triggering an adaptive immune response, higher levels of immune mediators or receptors, or perhaps greater permeability in the celiac intestine. It is possible that, beyond any greater genetic susceptibility, most of the differences stem from epigenetic factors that are influenced by the presence or absence of certain nutrients in the diet. Other factors, such as exposure to NSAIDs like naproxen or aspirin, can profoundly increase intestinal permeability in the non-celiac, rendering them susceptible to gliadin’s potential for activating secondary adaptive immune responses. This may explain why the typical HLA-DQ haplotypes are not found in up to 5% of all cases of classically defined celiac disease. However, determining the factors associated with greater or lesser susceptibility to gliadin’s intrinsically toxic effect should be secondary to the fact that it has been demonstrated to be toxic to both non-celiacs and celiacs.

2) WHEAT GLIADIN CREATES INTESTINAL PERMEABILITY

Gliadin upregulates the production of a protein known as zonulin, which modulates intestinal permeability. Over-expression of zonulin is involved in a number of autoimmune disorders, including celiac disease and type 1 diabetes. Researchers have studied the effect of gliadin on zonulin production and subsequent gut permeability in both celiac and non-celiac intestines, and have found that “gliadin activates zonulin signaling irrespective of the genetic expression of autoimmunity, leading to increased intestinal permeability to macromolecules.”10 These results indicate, once again, that a pathological response to wheat gluten is a normal, species-specific human response, and is not based entirely on genetic susceptibilities. Because intestinal permeability is associated with a wide range of disease states, including cardiovascular illness, liver disease and many autoimmune disorders, I believe this research indicates that gliadin (and therefore wheat) should be avoided as a matter of principle.

3) WHEAT GLIADIN HAS PHARMACOLOGICAL PROPERTIES

Gliadin can be broken down into peptides of various lengths. Gliadorphin is a peptide seven amino acids long (Tyr-Pro-Gln-Pro-Gln-Pro-Phe) that forms when the gastrointestinal system is compromised. When digestive enzymes are insufficient to break gliadorphin down into fragments two to three amino acids long, and a compromised intestinal wall allows the entire seven-amino-acid fragment to leak into the blood, gliadorphin can pass into the brain through the circumventricular organs and activate opioid receptors, disrupting brain function.

There have been a number of gluten exorphins identified: gluten exorphin A4, A5, B4, B5 and C, and many of them have been hypothesized to play a role in autism, schizophrenia, ADHD and related neurological conditions. In the same way that the celiac iceberg illustrated the illusion that intolerance to wheat is rare, it is possible, even probable, that wheat exerts pharmacological influences on everyone. What distinguishes the schizophrenic or autistic individual from the functional wheat consumer is the degree to which they are affected.

Below the tip of the “Gluten Iceberg,” we might find these opiate-like peptides to be responsible for bread’s general popularity as a “comfort food,” and our use of phrases like “I love bread” or “this bread is to die for” to be indicative of wheat’s narcotic properties. I believe a strong argument can be made that the agricultural revolution that occurred approximately 10,000-12,000 years ago, as we shifted from the Paleolithic into the Neolithic era, was precipitated as much by environmental necessities and human ingenuity as it was by the addictive qualities of psychoactive peptides in the grains themselves.

The world-historical reorganization of society, culture and consciousness accomplished through our symbiotic relationship with cereal grasses may have had as much to do with our being mastered by agriculture as with our ability to master it. The presence of pharmacologically active peptides would have further sweetened the deal, making it hard to distance ourselves from what became a global fascination with wheat.

An interesting example of wheat’s addictive potential pertains to the Roman army. The Roman Empire was once known as the “Wheat Empire,” with soldiers being paid in wheat rations. Rome’s entire war machine, and its vast expansion, was predicated on the availability of wheat. Forts were actually granaries, holding up to a year’s worth of grain in order to endure sieges from their enemies. Historians describe how soldiers could be punished by being deprived of their wheat rations and given barley instead. The Roman Empire went on to facilitate the global dissemination of wheat cultivation, fostering a form of imperialism with biological as well as cultural roots.

The Roman appreciation for wheat, like our own, may have had less to do with its nutritional value as a “health food” than with its ability to generate a unique narcotic reaction. It may satisfy our hunger while generating a repetitive, ceaseless cycle of craving more of the same, and, by doing so, enable the surreptitious control of human behavior. Other researchers have come to similar conclusions. According to the biologists Greg Wadley and Angus Martin:

“Cereals have important qualities that differentiate them from most other drugs. They are a food source as well as a drug, and can be stored and transported easily. They are ingested in frequent small doses (not occasional large ones), and do not impede work performance in most people. A desire for the drug, even cravings or withdrawal, can be confused with hunger. These features make cereals the ideal facilitator of civilization (and may also have contributed to the long delay in recognizing their pharmacological properties).”

4) WHEAT LECTIN (WGA) DAMAGES OUR TISSUE

Wheat contains a lectin known as wheat germ agglutinin (WGA), which is responsible for causing direct, non-immune-mediated damage to our intestines and, after entering the bloodstream, damage to distant organs in our body.

Lectins are sugar-binding proteins that are highly selective for their sugar moieties. It is believed that wheat lectin, which binds to the monosaccharide N-acetyl glucosamine (NAG), provides defense against predation from bacteria, insects and animals. Bacteria have NAG in their cell walls, insects have an exoskeleton composed of polymers of NAG called chitin, and the epithelial tissue of mammals (e.g. the gastrointestinal tract) has a “sugar coat” called the glycocalyx, which is composed, in part, of NAG. The glycocalyx can be found on the outer surface (apical portion) of the microvilli within the small intestine.

There is evidence that WGA, by binding to the surface of the villi, may cause increased shedding of the intestinal brush border membrane, reduction in surface area, acceleration of cell losses and shortening of villi. WGA can mimic the effects of epidermal growth factor (EGF) at the cellular level, suggesting that the crypt hyperplasia seen in celiac disease may be due to a mitogenic response induced by WGA. WGA has been implicated in obesity and “leptin resistance” by blocking the receptor in the hypothalamus for the appetite-satiating hormone leptin. WGA has also been shown to have an insulin-mimetic action, potentially contributing to weight gain and insulin resistance.15 And, as discussed earlier, wheat lectin has been shown to induce IgA-mediated damage to the kidney, indicating that nephropathy and kidney cancer may be associated with wheat consumption.

5) WHEAT PEPTIDES EXHIBIT MOLECULAR MIMICRY

Gliadorphin and gluten exorphins exhibit a form of molecular mimicry that affects the nervous system, but other wheat proteins affect different organ systems. The digestion of gliadin produces a peptide 33 amino acids long, known as the 33-mer, which has a remarkable homology to the internal sequence of pertactin, an immunodominant protein of the bacterium Bordetella pertussis (the cause of whooping cough). Pertactin is considered a highly immunogenic virulence factor, and is used in vaccines to amplify the adaptive immune response. It is possible that the immune system may confuse this 33-mer with a pathogen, resulting in a cell-mediated or an adaptive immune response, or both, against Self.

6) WHEAT CONTAINS HIGH LEVELS OF EXCITO-TOXINS

John B. Symes, D.V.M., is responsible for drawing attention to the potential excitotoxicity of wheat, dairy and soy, due to their exceptionally high levels of the non-essential amino acids glutamic and aspartic acid. Excitotoxicity is a pathological process in which glutamic and aspartic acid over-activate nerve cell receptors (e.g. the NMDA and AMPA receptors), leading to calcium-induced nerve and brain injury. Of all the cereal grasses commonly consumed, wheat contains the highest levels of glutamic acid and aspartic acid. Glutamic acid is largely responsible for wheat’s exceptional taste. The Japanese coined the word umami to describe the extraordinary “yummy” effect that glutamic acid exerts on the tongue and palate, and invented monosodium glutamate (MSG) to amplify this sensation. Though MSG was first derived from kelp in Japan, wheat can also be used as a source due to its high glutamic acid content. It is likely that wheat’s popularity, alongside its opiate-like activity, has everything to do with the natural flavor enhancers already contained within it. These amino acids may contribute to neurodegenerative conditions such as multiple sclerosis, Alzheimer’s disease and Huntington’s disease, and to other nervous disorders such as epilepsy, attention deficit disorder and migraines.

CONCLUSION

In this article I have proposed that celiac disease be viewed not as a rare “genetically-determined” disorder, but as an extreme example of our body communicating to us a once-universal, species-specific affliction: severe intolerance to wheat. Celiac disease reflects back to us how profoundly our diet has diverged from what was, until only recently, a grain-free diet, and even more recently, a wheat-free one. We are so profoundly distanced from that dramatic Neolithic transition in cultural time that “missing is any sense that anything is missing.” The body, on the other hand, cannot help but remember a time when cereal grains were alien to the diet, because in biological time it was only moments ago.

Eliminating wheat, if not all of the members of the cereal grass family, and returning to dicotyledons or pseudo-grains like quinoa, buckwheat and amaranth, may help us roll back the hands of biological and cultural time to a time of clarity, health and vitality that many of us have never known before. When one eliminates wheat and fills the void left by its absence with fruits, vegetables, high-quality meats and foods consistent with our biological needs, we may begin to feel a sense of vitality that many would find hard to imagine. If wheat really is more like a drug than a food, anesthetizing us to its ill effects on our body, it will be difficult for us to understand its grasp upon us unless and until we eliminate it from our diet. I encourage everyone to see celiac disease not as a condition alien to our own. Rather, the celiac gives us a glimpse of how profoundly wheat may distort and disfigure our health if we continue to expose ourselves to its ill effects. I hope this article will provide inspiration for non-celiacs to try a wheat-free diet and judge for themselves whether wheat is really worth eliminating.